Our news

A new collaboration is set to transform healthcare accessibility with the launch of a multilingual AI clinical assistant that will break down language barriers, empowering patients to receive care in their preferred language.

Dr Tian Gan joins the CfAA as our newest Research Associate. In this blog she shares a little on her background and her focus for future work at the centre.

The CfAA has been awarded new funding to identify how Frontier AI could be safely deployed within fully autonomous driving systems.

The Centre for Assuring Autonomy has published a comprehensive approach to safety cases for the assurance of AI and autonomous systems.

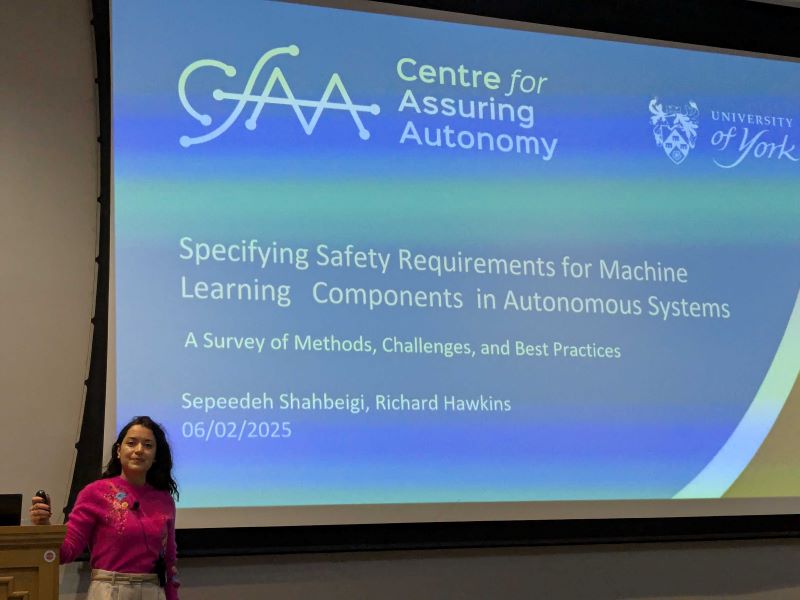

York was the location for 2025’s Safety Critical Systems Symposium, and the CfAA was well represented with five speakers, including two keynotes, covering a breadth of safety-related topics.

The AI Action Summit in Paris was a far-reaching look at the breathtaking advance of AI in our world. Across the five days of events around the common theme of the benefits and risks of Frontier AI, it became clear that safety – in all its forms – is a global challenge with many different facets. Here our director, Professor John McDermid OBE FREng, shares his insights as both a guest and participant in the summit.

A new Centre of Excellence for Regulatory Science and Innovation in AI & Digital Health Technologies (CERSI-AI) will support regulators by balancing innovation with the safe and responsible deployment of AI in healthcare.

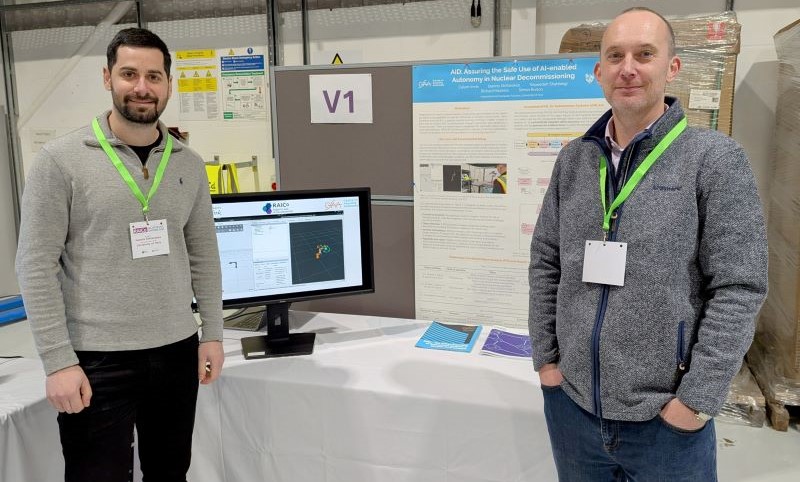

The Centre for Assuring Autonomy has demonstrated its work in the safety assurance of a robotic arm to support safer nuclear decommissioning at a showcase for regulators and industry professionals.

Students have gained real-world experience in academic research in safe AI and autonomous systems during intern placements at the Centre for Assuring Autonomy (CfAA).

The government’s long-awaited AI Opportunities Action Plan places a welcome emphasis on the potential benefits of AI including the ability to foster growth and to provide better public services.

In our latest white paper we look at a growing trend in discussions around risks in AI models - that of guardrails.

The latest report from the Centre for Assuring Autonomy (CfAA) has found increased confidence in the safety assurance of autonomous processes across the maritime industry.

The Centre for Assuring Autonomy has welcomed four new researchers from a cross section of disciplines.

The Assuring Autonomy International Programme (AAIP) collaborates with the Alan Turing Institute to develop a technique for the ethical assurance of data-driven technologies.

As the campaigning ramps up ahead of the General Election we look at what might happen to the future of safe and responsible AI.

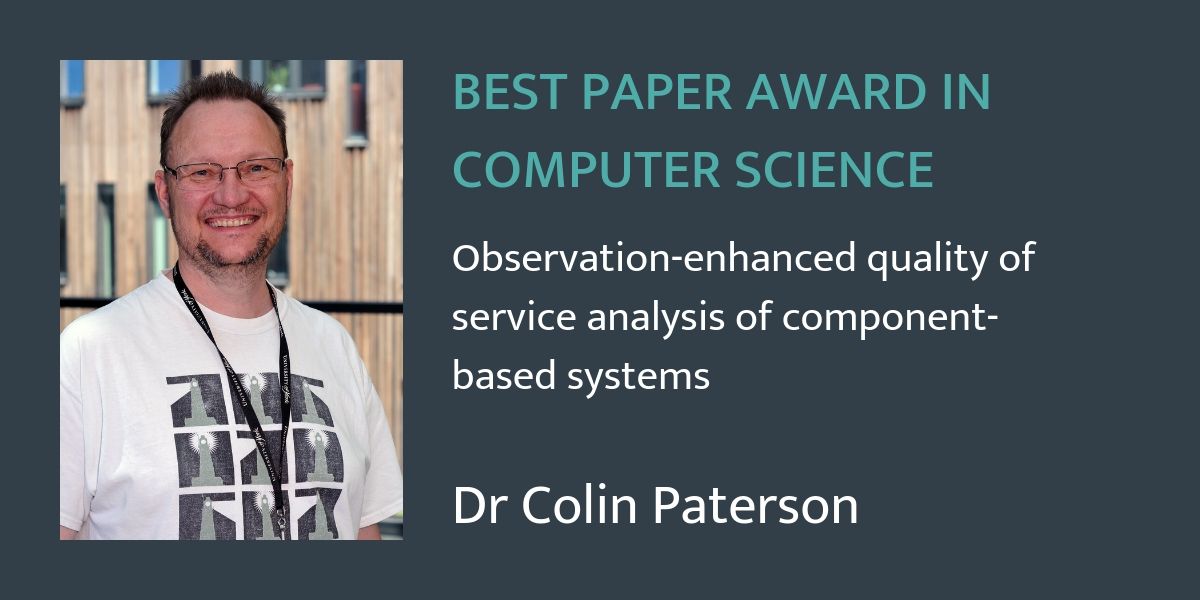

Academics and researchers from the Centre for Assuring Autonomy have received two notable awards for recent papers at separate conferences.

In March we held our first workshop focused on introducing maritime autonomy and the challenges of safety and assurance. The workshop identified ways to introduce and build a safe maritime autonomy ecosystem, which have been put together in a new report.

Centre for Assuring Autonomy Director Professor John McDermid has been selected to join the Department for Transport’s (DfT) College of Experts.

The new Centre for Assuring Autonomy, a partnership between Lloyd’s Register Foundation and the University of York, has launched to address the pressing challenges around safety which are preventing society from unlocking the full benefits of AI and autonomy.

NATS Research and Development (R&D) team and the Assuring Autonomy International Programme, a partnership between the University of York and Lloyd’s Register Foundation, have teamed up on a project to research the assurance for safety of automated decision-making tools in the complexity of an air traffic control environment.

The University’s UKRI-funded AI centre for doctoral training in Lifelong Safety Assurance of AI-enabled Autonomous Systems (SAINTS CDT) has opened applications for its first cohort of PhD students.

The University of York is one of 16 universities to receive funding for a new Doctoral Training Centre in artificial intelligence (AI) to train the next generation of researchers and innovators.

Ahead of the upcoming AI Safety Summit, we set out three key approaches which we believe will help regulators and policy makers work towards the goal of answering the big question: 'is it safe?'.

New network membership to support the University’s leading research in safe and responsible artificial intelligence (AI) systems.

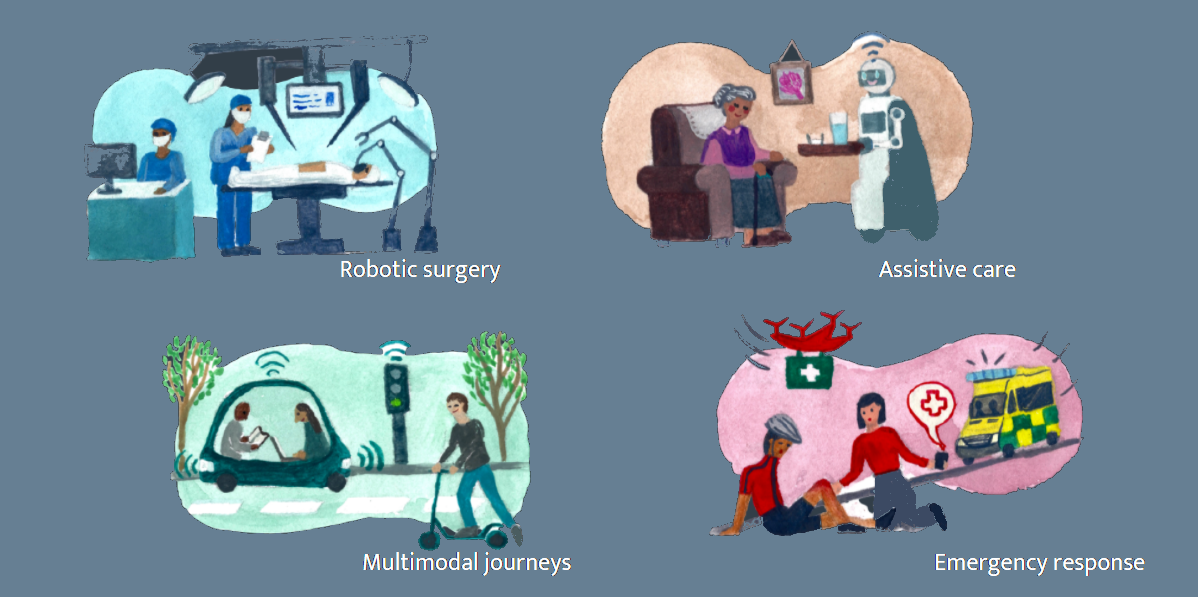

Researchers at the Universities of York and Sheffield worked with disabled young people to reimagine autonomous systems so they can enhance the lives of everyone.

Two leading experts from the Fraunhofer IKS and the University of York in the safety of complex systems have published a paper proposing a new approach to assure the safety of cognitive cyber-physical systems (CPS), including automated driving systems.

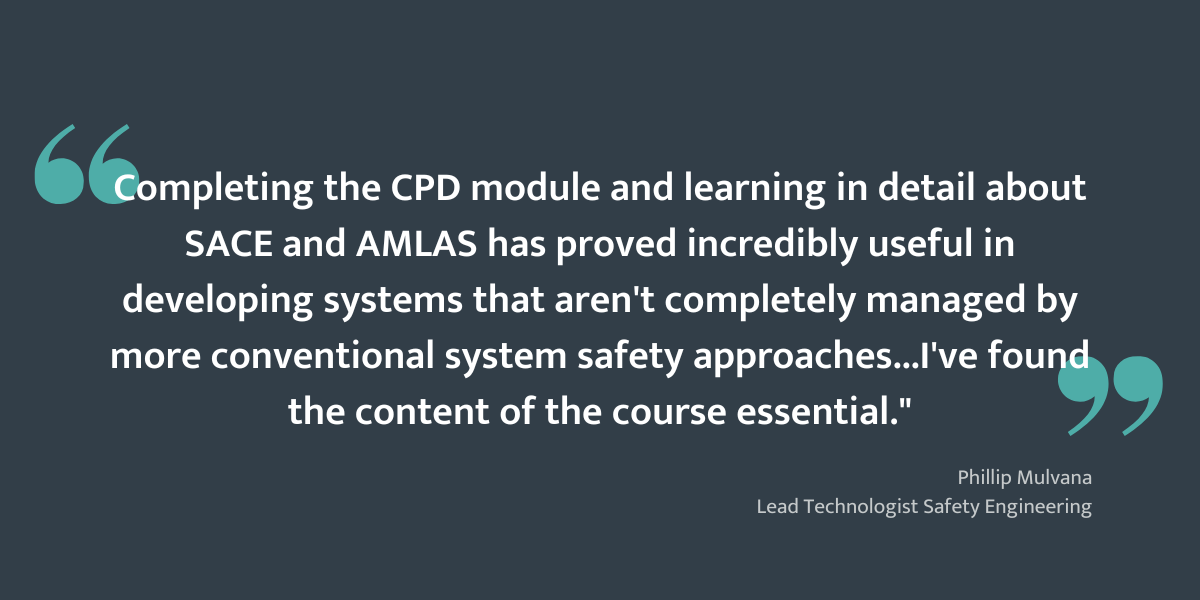

The AAIP MSc module is the only one dedicated to the safety assurance of autonomy and machine learning

Our Year in review 2022 considers how our assuring autonomy community are guiding the safe development of autonomous systems across the globe

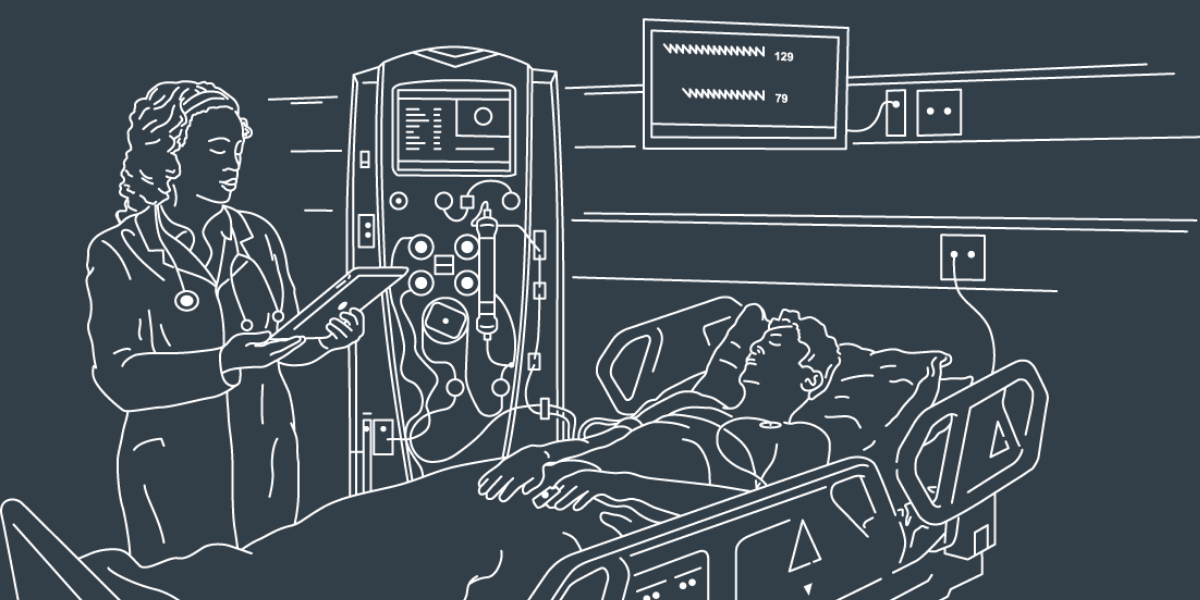

Balancing the technical and ethical sides of assuring the safety of AI in the complex world of healthcare

Dr Colin Paterson tells us how the experiences and aspirations of young people with complex needs are being used in research into trustworthy autonomous systems

Professor Farah Magrabi, an AAIP Programme Fellow, has been appointed as one of Australia’s representatives on the Global Partnership for AI (GPAI)

Funding from MPS Foundation awarded to new AI in healthcare project

Public consultation for BSI British standard: BS 30440 Validation framework for the use of AI in healthcare is launched

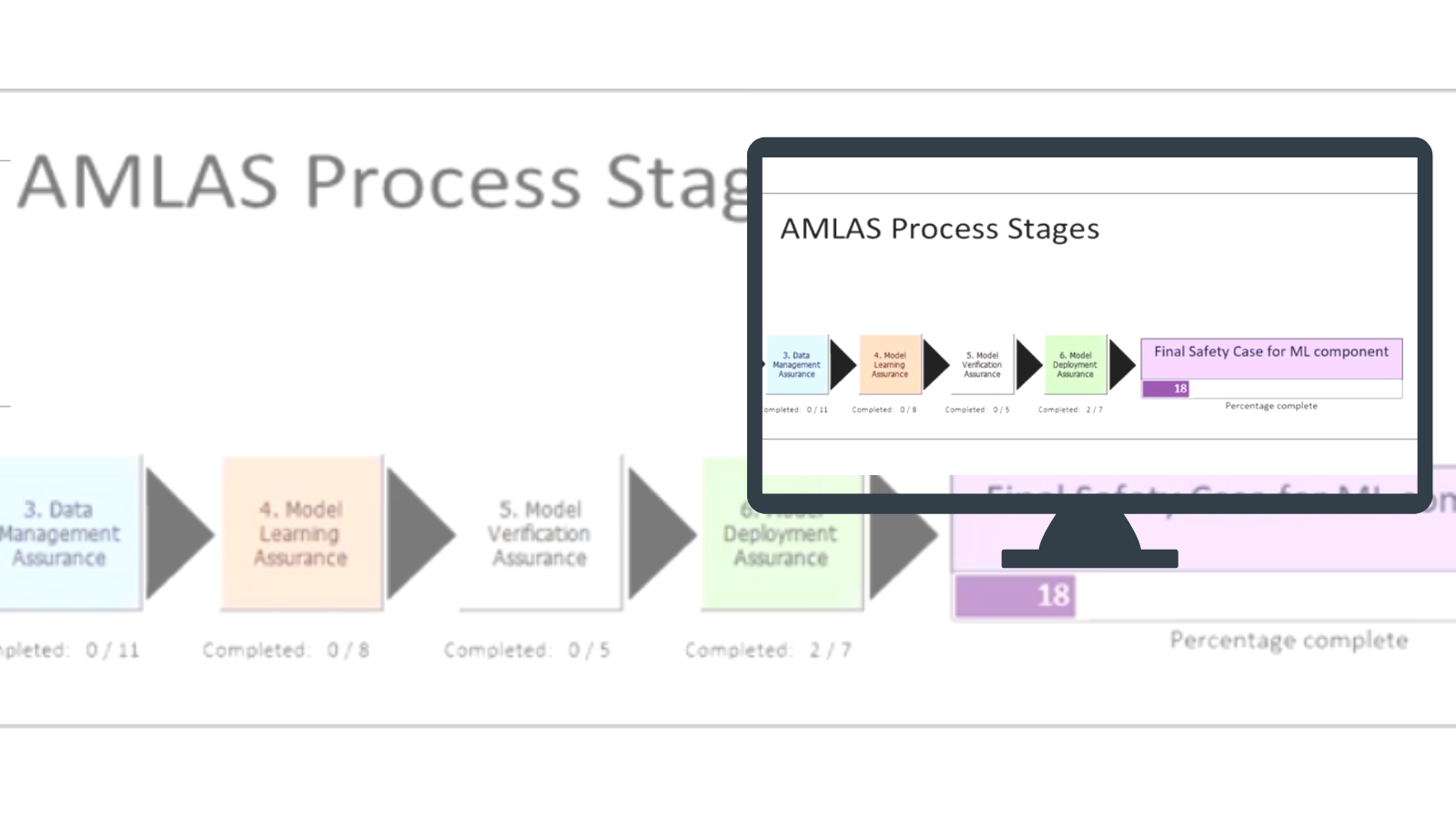

If you've used our AMLAS guidance please give us your feedback and support our work to provide more expert safety assurance guidance.

The Centre for Data Ethics and Innovation (CDEI) published “Responsible Innovation in Self-Driving Vehicles” this month, featuring recommendations shaped by the AAIP’s Director, Professor John McDermid, and Professor Jack Stilgoe of UCL

Safety experts from the Assuring Autonomy International Programme have published their latest guidance to support system developers, safety engineers, and assessors to design and introduce safe autonomous systems.

A partnership conference from AAIP and NHS Digital to bring delegates up-to-date with technology developments in healthcare, how the safety of these systems is being assured, and how ethics and governance are being considered.

The exhibit, developed by the Assuring Autonomy International Programme (AAIP) and the National Railway Museum, will explore how autonomous technologies could help shape the future of the railways.

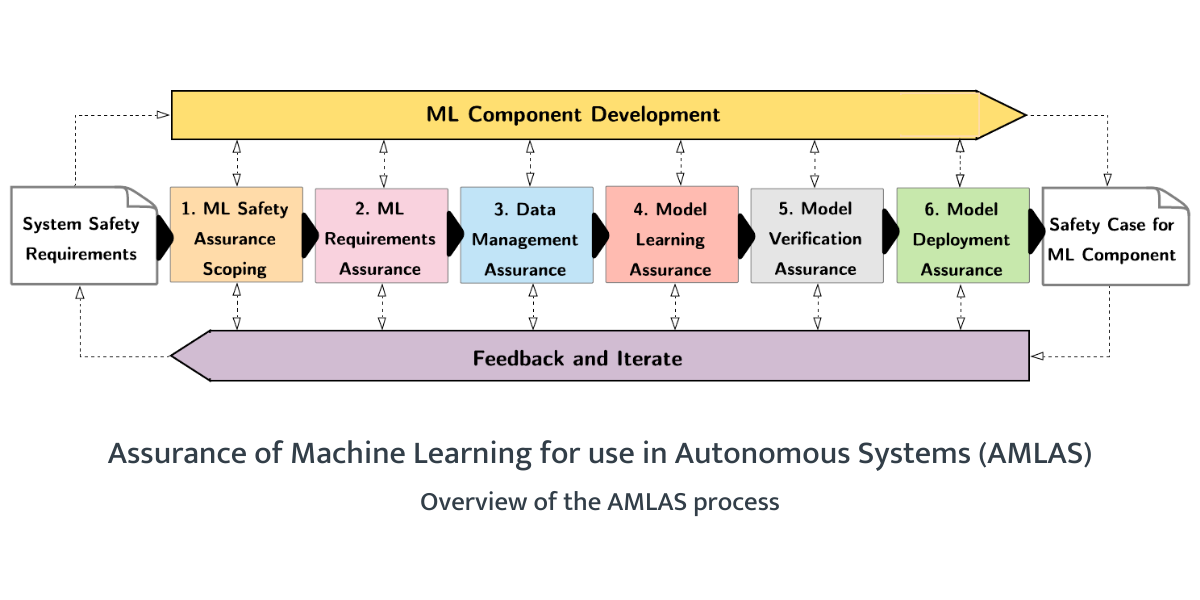

New tool will help machine learning developers and safety engineers create a safety case for ML components

AAIP report presents two new ontological models to support work to identify all of the risks associated with the introduction of autonomous vehicles

A free conference and workshop organised by NHS Digital and the AAIP for clinicians and those working in healthcare IT

The Call for Papers is now out for the 3rd international Dynamic Risk managEment for AutonoMous Systems (DREAMS) workshop

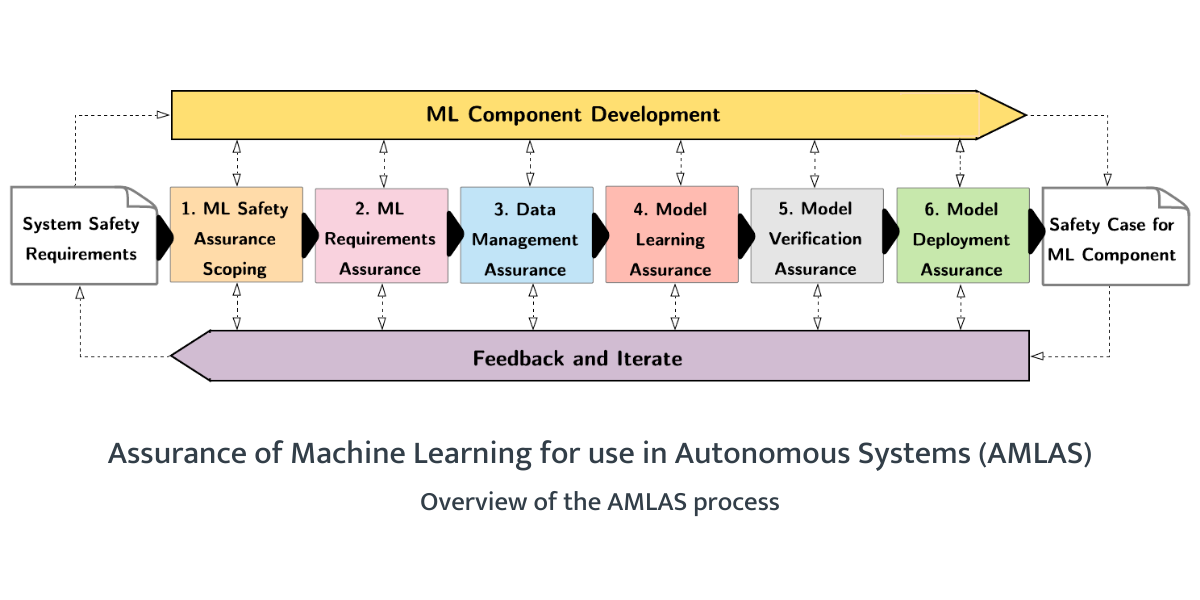

Safety experts at the University of York develop first standardised procedure to help assure the safety of robots, delivery drones, self-driving cars, or any products that use machine learning

Two new projects involving AAIP researchers have been awarded almost £300k of pump-priming funding from the UKRI Trustworthy Autonomous Systems programme.

We are delighted to announce the launch of our new guidance website

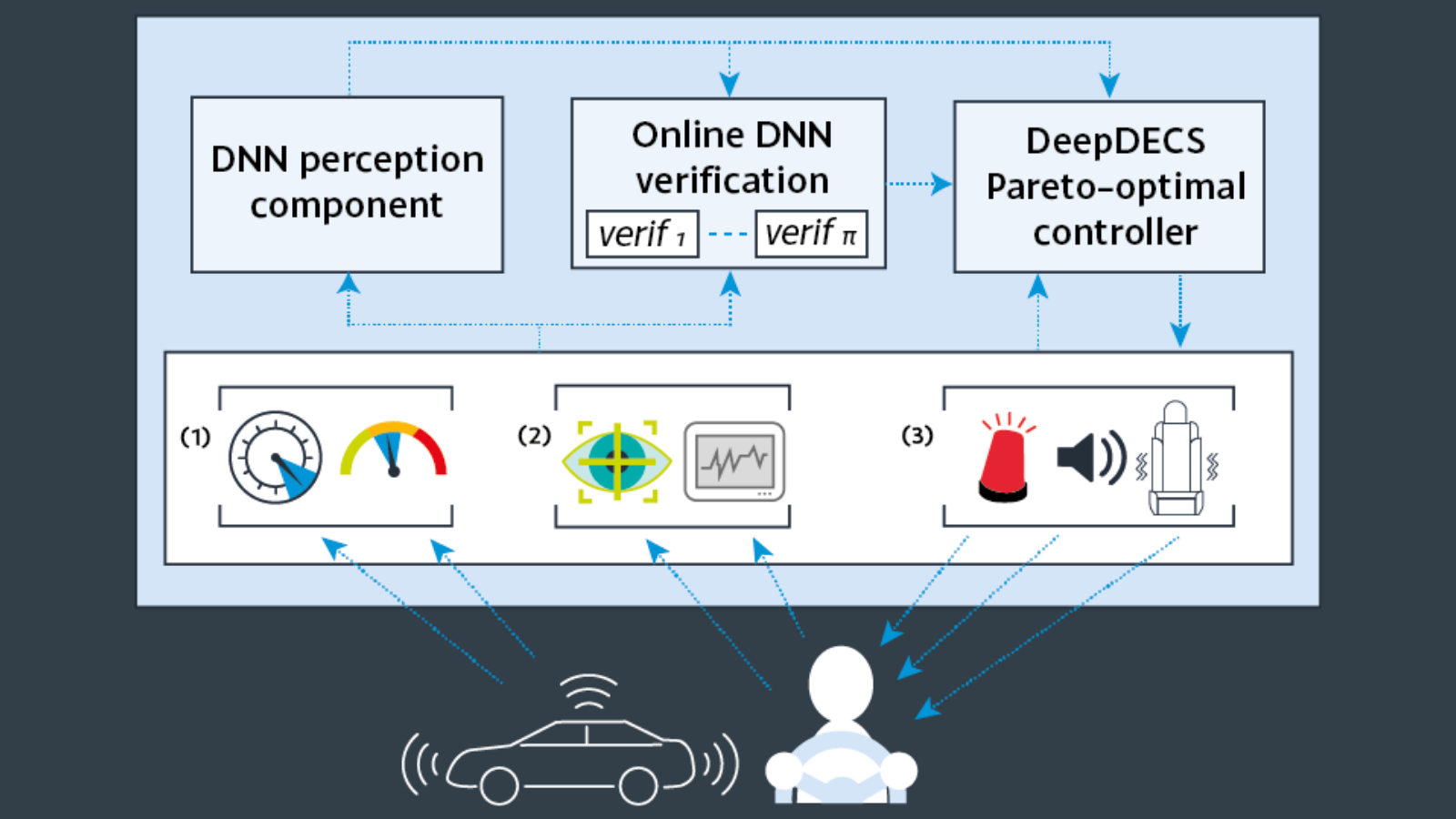

DeepDECS is a new method for the synthesis of correct-by-construction discrete-event controllers for autonomous systems that use deep neural network classifiers for the perception step of their decision-making processes

Our Year in review 2021 considers how our assuring autonomy team and community are guiding the safe development of autonomous systems through the translation of our significant body of research into guidance and training

AAIP demonstrator project leads to a new report highlighting the regulatory and legal barriers that need to be removed to enable the safe operation of remote-controlled and autonomous ships in UK waters

SAM project article one of BMJ Health & Care Informatics top ten most-cited papers published in 2019

An article from the SAM demonstrator project has been named one of the top ten most-cited papers published in BMJ Health & Care Informatics in 2019.

University of York academics have been awarded £700,000 to develop a legal and moral framework for tracing and allocating responsibility behind autonomous systems, such as driverless cars or medical systems.

Register now for a free conference discussing the safe development and utilisation of AI in healthcare

Richard Hawkins discusses the safety of self-driving cars with Dr Claire Asher

Online collaboration for this year's Manufacturing Robotics Challenge

AAIP demonstrator project leads to new white paper setting out a human factors perspective on the use of artificial intelligence (AI) applications in healthcare

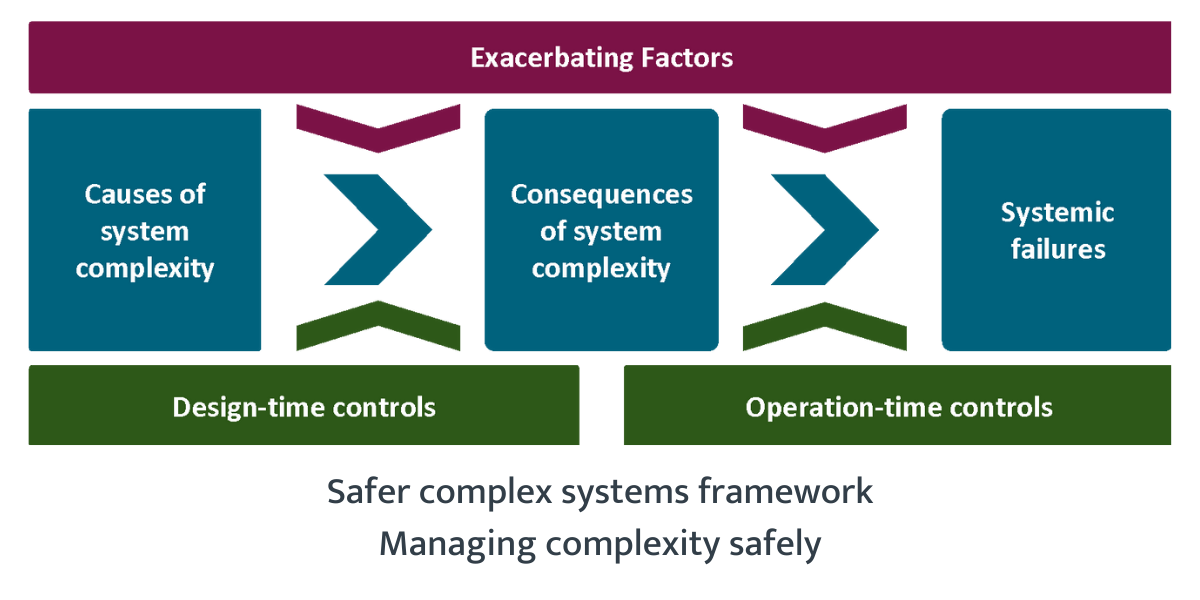

Two reports, authored and co-authored by the University of York and published this week, highlight the increasing complexity of systems that are part of our everyday lives, and propose a framework that can help us to safely manage this increasing complexity and interconnectedness.

Dr Radu Calinescu was a joint editor of this special issue of Elsevier's Journal of Systems and Software

Read all the latest news and updates in the May 2021 AAIP email newsletter

This new book chapter describes the application of the Functional Resonance Analysis Method to study performance variability and trade-offs in an intensive care unit.

This new special issue is on resilient software and software‑controlled systems and was edited by Radu Calinescu and Felicita Di Giandomenico

AAIP’s Dr Mark Nicholson was part of the steering group which developed this standard.

In our latest CPD module, you will consider the challenges posed to safety engineering techniques and practice by robotics and autonomous systems.

New AAIP guidance presents a methodology for the Assurance of Machine Learning for use in Autonomous Systems (AMLAS).

Our 2020 annual review considers an unusual year, but one in which we have accomplished much of what we planned and achieved additional successes along the way.

Ahead of the launch of our Year in Review 2020, we’ve taken a look back at a somewhat unexpected year and some of the key activities and achievements along the way.

Submissions are invited for this special issue on the topic of AI auditing, assurance, and certification.

Is it appropriate for autonomous vehicles to be able to detect and make decisions based on characteristics protected by anti-discrimination legislation?

The FAA recently set out steps for the return of the Boeing 737 Max aircraft to commercial service. Professor John McDermid looks at what went wrong and the fixes that have been put in place.

An AI Command Centre, thought to be the first of its kind in the UK, is to be the focus of a study to understand how it will impact the quality, safety and organisation of healthcare at the Bradford hospital it is part of.

AAIP is delighted to endorse the new guidelines for improving clinical trial protocols and clinical trial reports

A project that will improve the ability of autonomous systems to reason about the impact of their decisions and actions on technical and social requirements and rules has been awarded over £3M from the UKRI Trustworthy Autonomous Systems (TAS) programme.

40th edition of SAFECOMP to be held at the University of York with a special theme of Safe human-robotic and autonomous system (RAS) interaction

The new paper examines the modelling assumptions that have been informing the COVID-19 policy-making processes and to what extent these assumptions and their limitations are communicated to decision-makers.

Elon Musk thinks his company Tesla will have fully autonomous cars ready by the end of 2020. Challenges remain - Professor John McDermid gives us five of the key obstacles.

New report provides single point of reference on the safety, regulatory and liability issues for operating inspection robots in the EU

New AAIP report presents framework for assuring the safety of highly automated driving systems

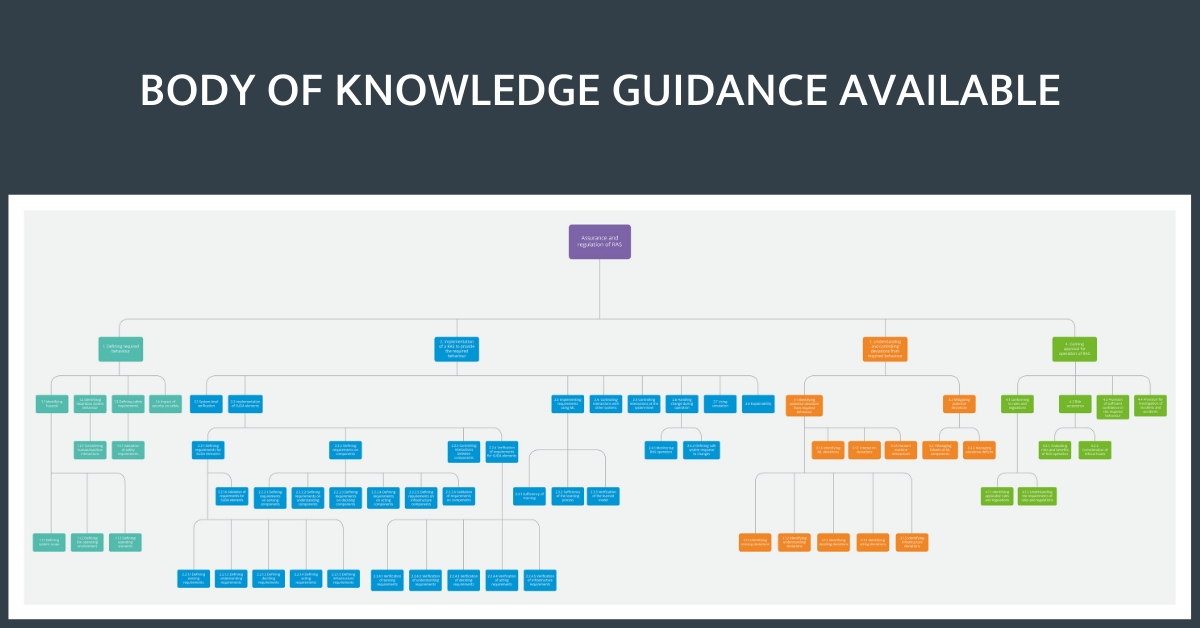

Today we are rolling out the first practical guidance that is freely accessible through the online AAIP Body of Knowledge to support the safe development of robotic and autonomous systems (RAS).

Every day across the world people depend upon complex systems for their everyday lives: healthcare, transportation, and the built environment they live and work in. These systems are evolving rapidly through developments in technology. But such progress can run ahead of the necessary changes to the structures and governance around the systems themselves that will ensure their safety.

AI-based tools in health care are challenging the standard clinical practices of assigning blame and assuring patient safety. This paper considers the possibility of patient harm caused by the decisions of an AI-based clinical tool, focusing on two aspects: moral accountability; and safety assurance.

The UK-RAS Network has announced five new strategic task groups to lead activities on key areas of interest to the Network. AAIP is delighted to be part of the Skills and Education in Robotics and Autonomous Systems group.

Professor John McDermid and Dr Ana MacIntosh talk to journalist Philippa Geering about the safety of artificial intelligence and autonomous systems

Collaboration is key to the success of the Programme and we have worked across the globe to join forces where needed. A real triumph of our partnerships is their multi-disciplinary nature: the safe introduction and adoption of robotics and autonomous systems is a complicated issue and we must work across disciplines to solve it.

Last month the Assuring Autonomy International Programme (AAIP) became the newest member of the Australian Alliance for Artificial Intelligence in Healthcare (AAAiH).

We are delighted to announce that Dr Radu Calinescu has been awarded funding from ORCA for a new verification and assurance project

Dr Radu Calinescu is a guest editor on an upcoming special edition

Ana MacIntosh to present at Lloyd's Register Foundation's international safety conference in October

Nikita Johnson received the International System Safety Society's George Peters Award at their recent conference

Dr Richard Hawkins and Professor John McDermid have written a briefing paper for the Institute of Manufacturing on assuring the safety of autonomy in manufacturing

The University of York is to establish a £35m flagship research facility to address global challenges in assuring the safety of robotics and other systems that use artificial intelligence. The York Global Initiative for Safe Autonomy will be based on Campus East and will house specialist laboratories and testing facilities – bringing together industrial partners and world-leading experts in the field.

Short talks and posters invited for this first workshop on assuring the safety of physically assistive robots

The current unprecedented interest in machine learning is fuelled by a vision of its applicability extending to healthcare, transportation, defence and other domains of great societal importance. Achieving this vision requires the use of machine learning in safety-critical applications that demand levels of assurance beyond those needed for current applications.

The Assuring Autonomy International Programme, a partnership between international charity Lloyd’s Register Foundation and the University of York, is investing more than £1.2 million into research that will advance the safety assurance of robotics and autonomous systems (RAS), while building public trust in such technology.

Given the recent rapid advances of robotics and artificial intelligence, this paper addresses the associated ethical issues.

The Law Commission's announcement today that they could begin work to establish a safety assurance scheme to facilitate the deployment of highly automated driving systems is a welcome next step towards the safe introduction of autonomous vehicles.

The Secretary of State for Work and Pensions has confirmed the appointment of Professor John McDermid as a non-executive director on the Health and Safety Executive (HSE) Board.

Congratulations to Assuring Autonomy International Programme Research Associate Colin on this award

Join us for a World of Wonder at this year's York Festival of Ideas.

AAIP is looking for applicants from industry, academia and regulatory organisations for our visiting fellowships.

Congratulations to AAIP Research Associate Nikita on her recent award

Find out more about what we accomplished in 2018 and where we're heading now: read our 2018 annual review.

The 2019 NHS Digital Health Safety Conference at the University of York: a beautiful city, 100 great minds and a whole day dedicated to discussing the safe introduction of new AI-based digital health technologies.

Guardian angel or big brother? Professor John McDermid gives us five things to consider before speed limiters are added to cars in an article in The Conversation

The Assuring Autonomy International Programme was part of a debate organised by White Rose Brussels about how we address the challenges of increasing human-robot interaction in many areas of society.

Can we expect autonomous vehicles to be moral agents? Decisively no, says Professor John McDermid in this article for The Conversation

Professor John McDermid's article on assuring the safety of smart city initiatives for a New Statesman Spotlight on Transport

Up to £500,000 awards are available for research into assuring the safety of robotics and autonomous systems

Major industrial and academic organisations gather in York to launch new programme in robotics and autonomous systems.

Professor John McDermid, Director of the Assuring Autonomy International Programme, responds to the deal.