Blog

Our latest posts from our team of academics, researchers and partners are below.

Across all sectors, technology is increasingly advertised as a means of improving the safety and effectiveness of specific tasks and operational environments. The maritime industry is no exception. Here, Dr Kate Preston explains why we need to fully understand the complexity of maritime pilotage before we can integrate technology like autonomy.

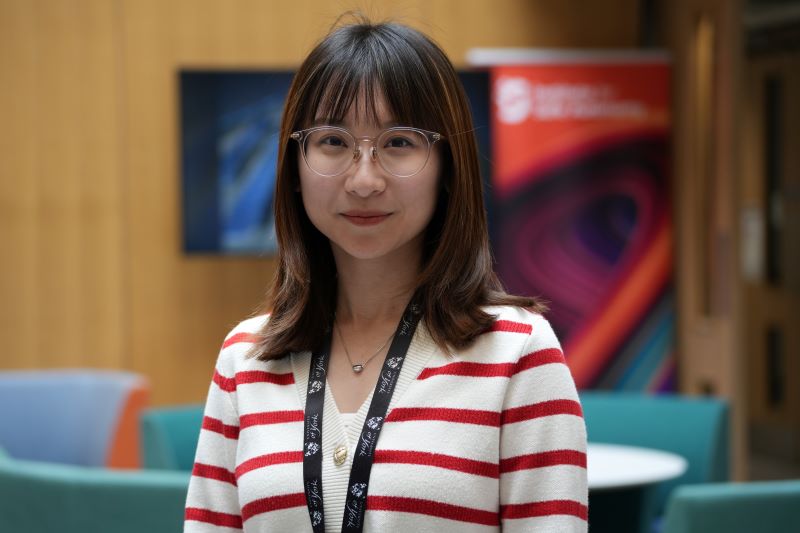

Our first CfAA staff spotlight of 2026 is Dr Tian Gan. Dr Gan joined us last year and has embedded herself in our maritime assurance work. Here, she talks about what led her to the CfAA and why maritime autonomy is an exciting and growing research area.


In our final staff spotlight of 2025, the Director of the Centre for Assuring Autonomy, Professor John McDermid, talks about his extensive career in safety, how he came to lead the CfAA, and how cross-collaboration, multidisciplinary working and a respected heritage in safety-critical systems have been key to the success of both the AAIP and the CfAA.

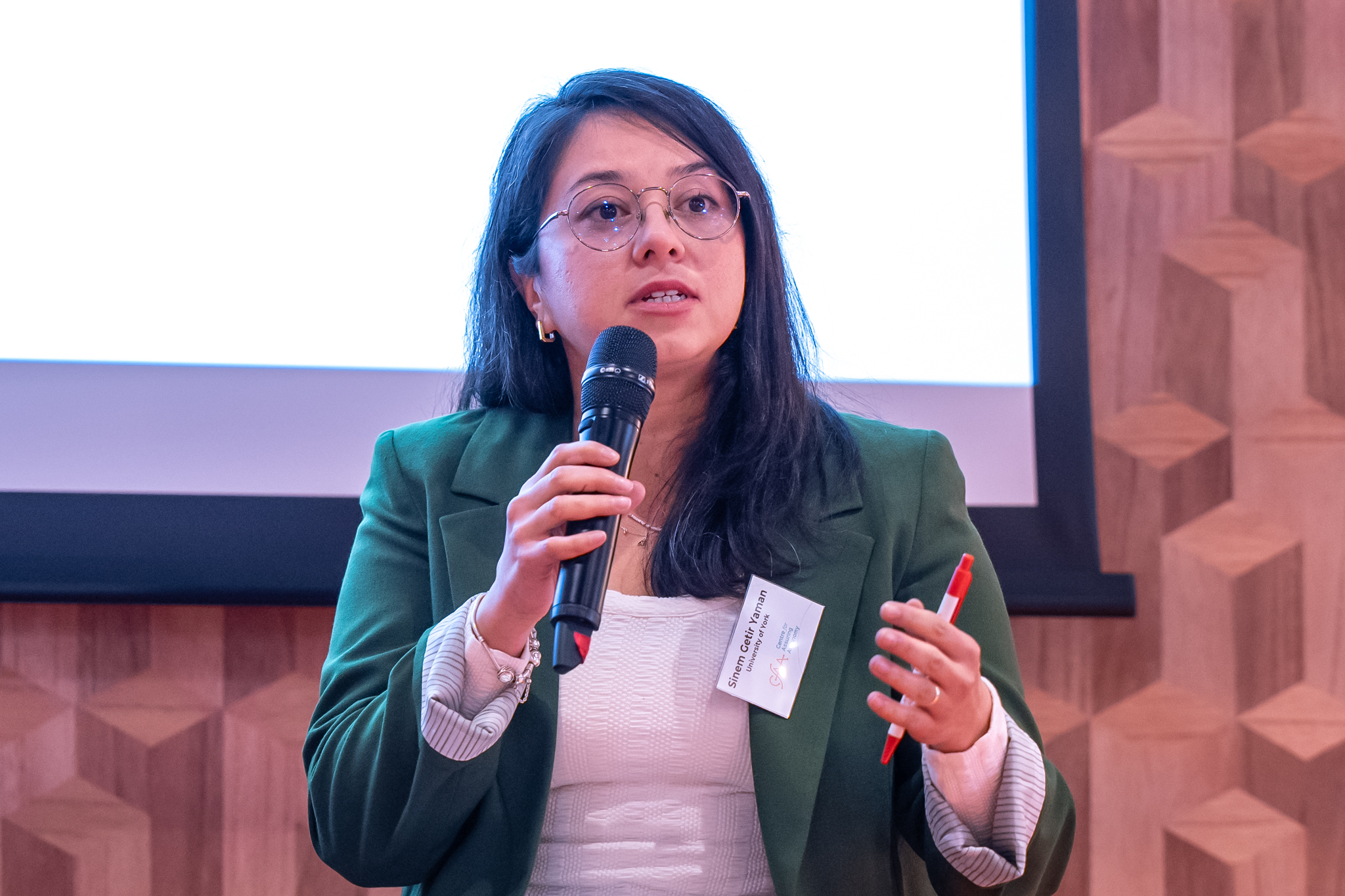

This month we're spotlighting the work of Dr Sinem Getir-Yaman, a CfAA researcher whose work in safety methodologies is helping to address the question of how autonomous systems operate safely in the real world. In this Q&A, Dr Getir-Yaman introduces her latest project focused on the safety and security of advanced AI and the need for closer industry collaboration.

What is Concept Creep and why do we need to think about it in the context of safety assurance?

Imagine a drone flying deep into a dark, dusty mine: no GPS, no sunlight, just walls, machinery, and maybe even a person standing in the corner. Now imagine you’re the person who has to make sure that drone doesn’t crash, doesn’t miss its path and, most importantly, doesn’t hurt anyone. That’s where our work at the CfAA comes in, as PhD student Nawshin Mannan Proma explains.

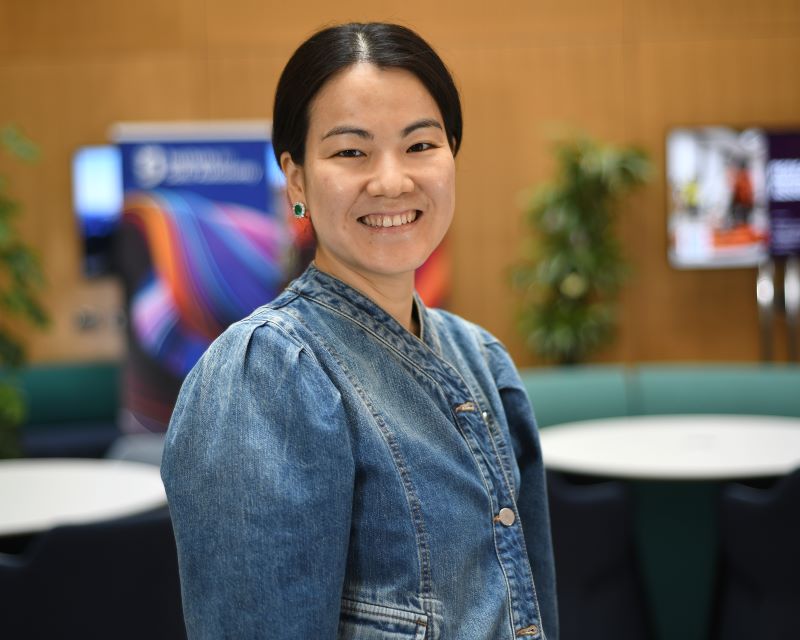

Dr Kate Preston is a Research Associate in Safe Autonomy with a specialism in human factors and ergonomics. In our latest staff spotlight, Dr Preston explains how her specialism is a critical ingredient in both the development and use of AI technologies, particularly in safety-critical areas such as healthcare.

In the first of this two-part blog series, Dr Victoria Hodge and Dr Philippa Ryan explore the use of autonomous mobile robots for solar panel inspection, and discuss the importance of a dedicated robotics platform to enable reliable monitoring of expanding solar farms.

The decommissioning of nuclear facilities presents a number of growing challenges and risks. In this blog, Research Associates Dr Calum Imrie and Dr Ioannis Stefanakos review a recent project which tackled one of these key challenges.

As both our Research and Innovation Fellow and Business Development and Delivery Manager at the CfAA, Dr John Molloy is heavily involved in working with our industry partners, particularly in the maritime space. In this spotlight he shares how his understanding of engineering challenges is helping shape our approach to safety assurance.

As our Director of Strategic Programmes, Dr Ana MacIntosh is responsible for developing and overseeing a portfolio of major programmes under the umbrella of the CfAA. In this spotlight she shares how her role helps shape the current and future work of the CfAA in this prominent and fast-moving field.

Research Associate, Dr Sepeedeh Shahbeigi, works at the intersection of AI and safety, focusing on how we can bridge the gap between theoretical safety concepts and practical implementation in real-world autonomous systems.

In the last 12 months, the CfAA’s work in the maritime sector has revealed a significant shift in how the maritime industry is approaching autonomy and in its confidence in its ability to adopt such technologies safely. In his latest blog, Dr John Molloy, CfAA’s Business Development and Delivery Manager, explores what the next phase of adoption may look like.

Dr Yan Jia is a lecturer in AI Safety and part of the CfAA research team. In our latest staff spotlight, she explains more about her work in safety assurance and how she draws on her niche background in safety-critical healthcare applications to advance this important area of research.

Dr Nathan Hughes’ work on understanding how people make decisions with technology is crucial for building on the research into human-centred autonomy undertaken at the CfAA. Learn why investigating how and why humans make decisions is so important in designing safe AI systems across distinct domains.

Have you ever paused to consider the sheer number of decisions made every time we get behind the wheel? What drives these decisions? How do we adapt to diverse conditions? In this blog, PhD student Hasan Bin Firoz explores why decision making for autonomous driving remains an unsolved challenge.

Early career researcher Dr Ioannis Stefanakos develops techniques and methodologies that enable the safe and effective use of autonomous and self-adaptive systems deployed in a range of applied settings, including hospital emergency departments and domestic environments. He shares some insightful tips for PhD students and explains why his work provides a deep sense of fulfilment.

The latest findings from the Future of Life Institute’s review into the safety practices of six leading AI developers showed low scores across the board. Our Director, Professor John McDermid, explains why this result is not unexpected and what we need to consider to enable the safe deployment of Frontier AI models.

Lloyd’s Register Foundation Senior Research Fellow Dr Ryan reflects on how her fellowship allows her to explore different safety applications, and the ever-important and evolving relationship between the safety world and the AI landscape.

Forests are increasingly important as we attempt to mitigate the effects of climate change and prevent further loss of habitat for animals and plants across the globe.

Dr Hodge is a Centre Research Fellow with an accomplished and multifaceted career. Here, she tells us how this has proven to be a valuable asset in her work, and how multidisciplinary collaboration with a broad range of people continuously provides her with fresh perspectives for both her research and ways of working.

Professor Sujan joins the Centre as Chair in Safety Science. He tells us what first inspired him to work in Human Factors and safety science, and the most important challenges for AI technologies and their use - now and in the future.


The integration of Artificial Intelligence (AI) into the healthcare sector affects everyone from patients and clinicians to regulators and healthcare providers. Understanding the context and diverse risks of such an integration is critical to the long-term safety of patients and clinicians, and to the success of AI.

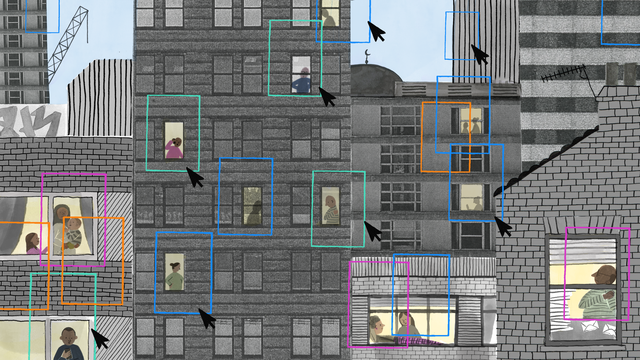

At the recent Festival of Ideas held in York our academics and researchers talked to over 120 members of the public about the current advances in AI and explored the hype and reality of AI technologies. The Festival of Ideas is a fantastic way for us to engage directly with the public and share the important research our experts do into safety assurance of AI and machine learning-enabled systems.

Our Chair of Systems Safety and Business Lead for the Centre for Assuring Autonomy shares a little about his background, his hopes for future opportunities and the top three things companies need to know about safe AI.

For early career researchers (ECRs), attending conferences and seminars is a great way to network and learn about different perspectives and progress in their specific area of research. In this blog, Research Associate Gricel Vazquez shares her experience of a recent seminar.

In the final part of his three-part blog series, Research and Innovation Fellow Dr Kester Clegg discovers that the problem is essentially that our system instructions are being ignored by GPT-4.

In the second instalment of his three-part blog series, Research and Innovation Fellow Dr Kester Clegg delves deeper.

In the first of this three-part blog series, Research and Innovation Fellow Dr Kester Clegg explores the ability of Large Language Models (LLMs) to ‘explain’ complex texts and asks whether their encoded knowledge is sufficient to reason about system failures in a similar way to human analysts.

In this blog, Programme Fellow Tarek Nakkach looks at changes to the regulatory landscape in the Middle East since 2021 and how developments in AI technology are impacting regulation and policy.

Multidisciplinary working has always been a core pillar of the AAIP’s research efforts. In this blog Dr Jo Iacovides from the University of York and Preetam Heeramun from NATS discuss the benefits of multidisciplinary projects and how they can open up new opportunities in safety-critical domains.

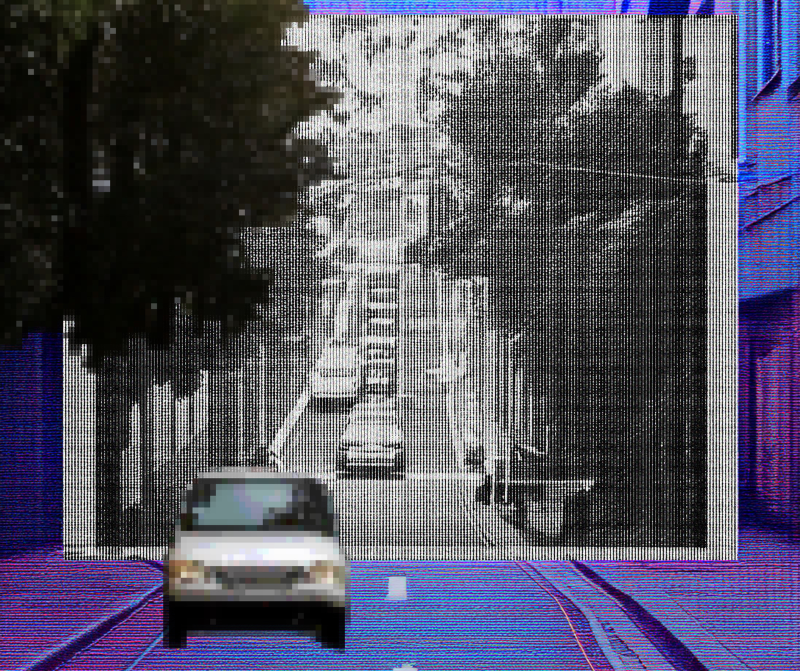

We recently welcomed Vladislav Nenchev from BMW as an AAIP Programme Fellow. His fellowship centres on pushing the boundaries of automatic verification methods for the safety and reliability of Automated and Autonomous Driving Systems. In this technical blog, he sets out the hurdles and possible solutions for safe autonomous driving.

In November, we hosted the final presentations of our hackathon challenge alongside the Oxford Robotics Institute as part of our work to safely assure the use of autonomous aerial vehicles in mines. This is the culmination of a series of events and workshops held throughout 2023 to establish how autonomous systems can assist human endeavour and reduce risk to life in challenging and dangerous environments.


2023 has been a big year for AI governance. Research Fellow, Dr Zoe Porter, explores three key reflections arising from these developments and what they can tell us about the future of safe AI.

Dr Richard Hawkins, Senior Lecturer in Computer Science explores how assuring the use of AI may help in the fight against wildfires.

How multidisciplinary collaboration is improving the safety of an AI-based clinical decision support system

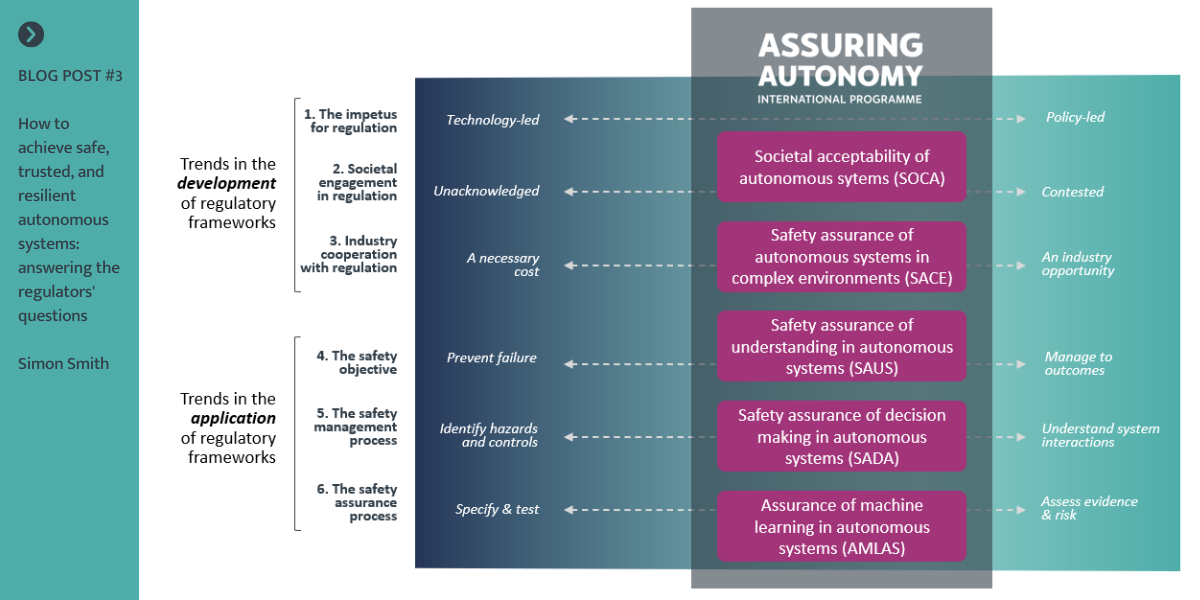

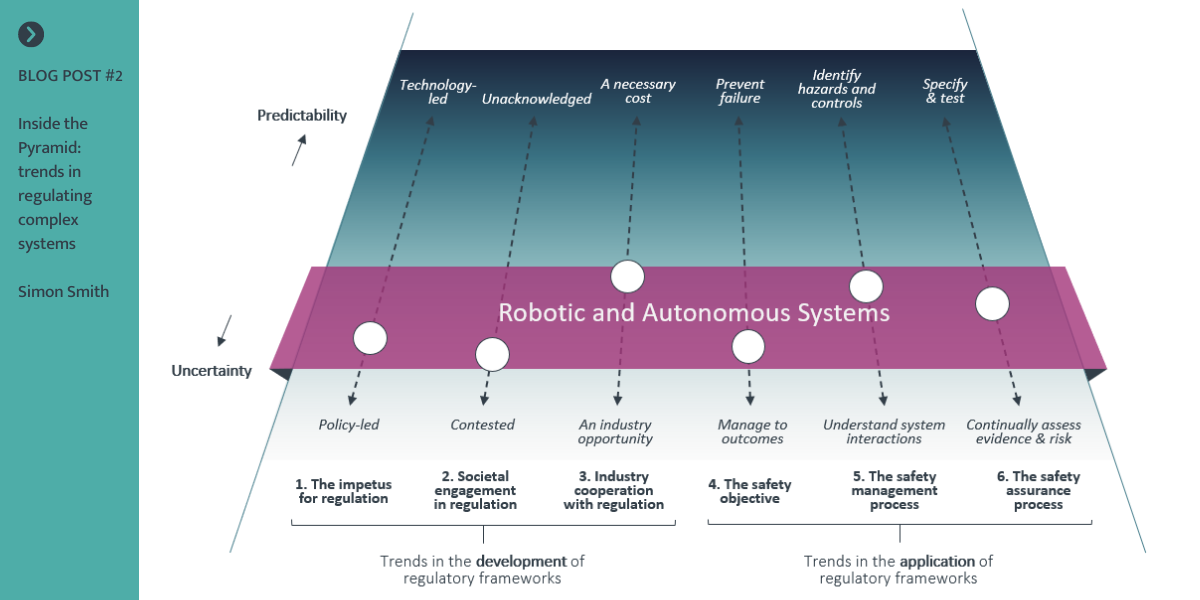

In the last of three blog posts, AAIP Fellow Simon Smith highlights some of the questions we need to consider to advance regulatory frameworks for the safe introduction of RAS, and some of the research being undertaken to answer them.

In the second of three blog posts, AAIP Fellow Simon Smith identifies six emerging trends and how they signpost the direction that regulation of RAS should be taking.

In the first of three blog posts, AAIP Fellow Simon Smith considers how regulatory frameworks could evolve to drive innovation and enable the safety assurance of systems that continuously adapt to their environments.

Author Professor Simon Burton concludes his series of blog posts by considering automated driving as a complex system, and proposing recommendations for the automotive industry to consider.

Moving towards safe autonomous systems

Data that reflects the intended functionality

This is my final article in this series; a great way to start the New Year! This article, in a way, is the practical conclusion of my research and so I will discuss my recommendations for law, policy and ethics for the United Arab Emirates (UAE).

The role of human factors in the safe design and use of AI in healthcare

Products, systems and organisations are increasingly dependent on data. In today’s Data-Centric Systems (DCS) data is no longer inert and passive. Its many active roles demand that data is treated as a separate system component.

I will compare the UAE liability regime to others, in particular the European Union regime and its approach to the liability of autonomous systems.

In this second post, I discuss the remedies available to a person who has suffered harm caused by an autonomous system.

The main point of law is the following: Who is liable when an autonomous system causes injury or death to a person or damage to property?


The term “AI safety” means different things to different people. Alongside the general community of AI and ML researchers and engineers, there are two different research communities working on AI safety.

This is the last in a series of blog posts exploring the safety assurance of highly automated driving to accompany a new AAIP report which is free to download on the website.

This is the fifth in a series of blog posts exploring the safety assurance of highly automated driving to accompany a new AAIP report which is free to download on the website.

This is the fourth in a series of blog posts exploring the safety assurance of highly automated driving to accompany a new AAIP report which is free to download on the website.

The idea that the driver is integral to vehicle control is fundamental to the automotive functional safety risk model. So what happens when we introduce autonomy?

This is the third in a series of blog posts exploring the safety assurance of highly automated driving to accompany a new AAIP report which is free to download on the website.

This is the second in a series of blog posts exploring the safety assurance of highly automated driving to accompany a new AAIP report which is free to download on the website.

This is the first in a series of blog posts exploring the safety assurance of highly automated driving to accompany a new AAIP report which is free to download on the website.

The societal benefits of autonomous vehicles (AVs) — those that operate fully without a driver — have never been clearer than they are right now. The delivery of essential items, such as medical supplies and food, with limited human contact, would serve us well in the current Coronavirus climate. While the development of AV performance is key, we must not neglect the assurance of their safety.

Consider an intensive care unit (ICU) where clinicians are treating patients for sepsis. They must review multiple informational inputs (patient factors, disease stage, bacteria, and other influences) in their diagnosis and treatment. If the patient is treated incorrectly who do you hold responsible? The clinician? The hospital? What if an aspect of the treatment was undertaken by a system using Artificial Intelligence (AI)? Who (or what) is responsible then?


Boeing may never have conceived the Boeing 737's Manoeuvring Characteristics Augmentation System as an autonomous system. In effect, however, it was autonomous: it took decisions about stalls without involving the pilots.

Human control of artificial intelligence (AI) and autonomous systems (AS) is always possible — but the questions are: what form of control is possible, and how is control assured?

Safety and security are two fundamental properties for achieving trustworthy cyber-physical systems (CPS). It is generally agreed that the boundary between the two has become blurred, but the relationship between them is still inadequately understood.

The recent news that Uber isn’t criminally liable for the fatal crash in Tempe raises interesting questions. Read our blog post of two halves – ethical and legal.

Considering the intent of requirements in machine learning.

Does machine learning give us super-human powers when it comes to perception in autonomous driving?

Do advanced driver assistance systems (ADAS) that perform too well become dangerous by giving us a false sense of security?