Blog post: Making the case for safety. Assurance strategies for automated driving systems

Posted on Monday 15 June 2020

With the concept of “safe enough” defined (see blog post #2), we must next attend to how to gain societal and legal acceptance of automated driving systems by presenting evidence that this level of safety has been met.

A safety assurance case provides a structured approach to this: it lays out the safety claims and the supporting evidence clearly, connected by an explicit argument. In our case, the claim is that the system will maintain safe behaviour under all conditions of its operational design domain, with a tolerable level of residual risk.

Structure

Such an argument can be structured in a number of different ways, all of which may be equally valid. One common approach, which we will illustrate here, is to demonstrate that all reasonably foreseeable and preventable hazards associated with the system have been identified, and that the risks associated with each have been minimised or their effects mitigated.

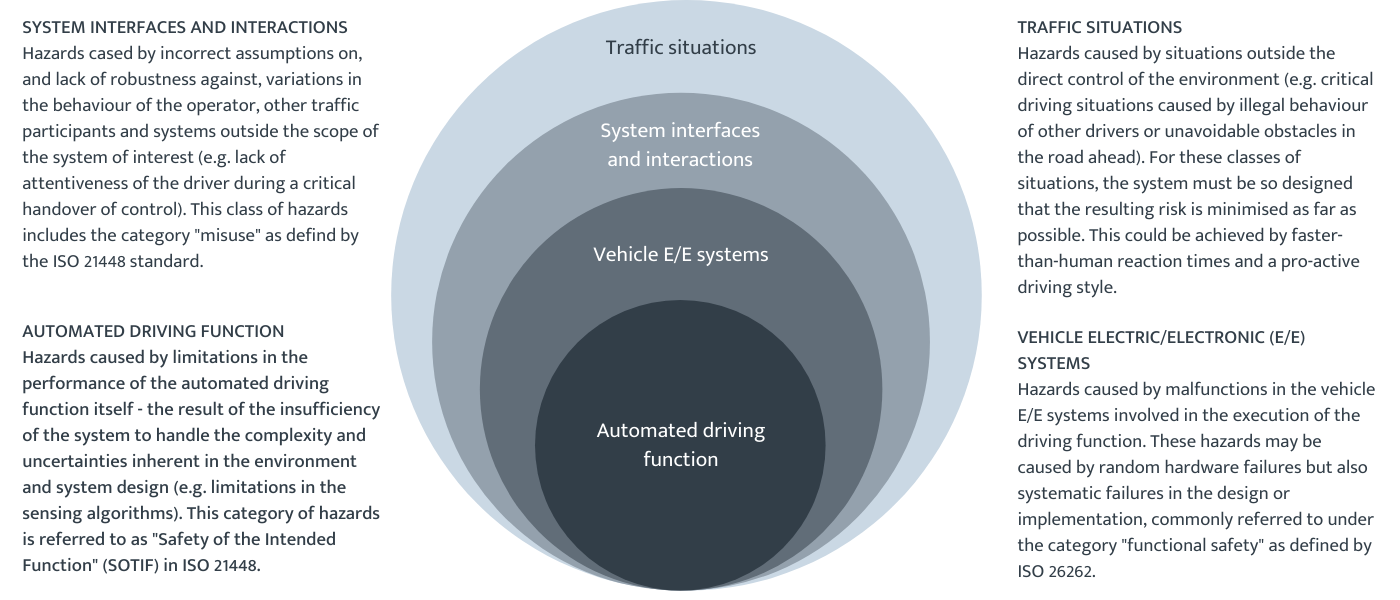

For automated driving systems, different categories of system behaviour and failures could lead to hazards, as illustrated in the layer model below. These categories form the basis for deriving more detailed safety requirements that must be fulfilled in order to reach the target level of safety.

The intersection of the layers at the bottom of the diagram highlights the fact that each of the above categories of hazard closely interacts with all the others. A related source of hazards that cuts across all of these layers is the deliberate manipulation of the system. The most obvious form is a cyber-security attack, i.e. manipulation of the E/E systems or their data, potentially resulting in safety-related malfunctions of the system. However, deliberate manipulation of the physical environment can also exploit functional insufficiencies in adverse ways. For example, it has been shown that carefully placed stickers on traffic signs can cause a camera-based perception function to mistake a stop sign for a 70 km/h speed limit sign.

Mitigating hazards

For each class of hazard, a separate argument can be made demonstrating how the hazards are eliminated or their effects at least mitigated. It is important to note that the specific assurance targets, and therefore the understanding of tolerated residual risk associated with each hazard, may vary based on legal requirements, societally perceived risk and other factors. The structure of the argument and the types of evidence will also vary for each type of hazard.

The focus of this series of blog posts corresponds to the class of hazards associated with functional insufficiencies of the automated driving function itself — those hazards associated with the behaviour of the automated driving system (shown in the bottom circle of the layer model above). Hazards such as electric shock or explosion of the battery systems are out of scope.

Evidence

The assurance case is constructed based on the logic that the operational design domain of the system is well understood and all hazards related to the domain have been identified, that the system is inherently capable of operating safely within this context and that sufficient evidence has been collected to provide trust in the system behaviour. In detail, the assurance case will need to collect evidence to support the following claims:

- the operational design domain (ODD) is sufficiently well defined and understood, such that a detailed specification of safe behaviour can be defined for the set of scenarios considered within the ODD.

- hazards associated with the performance of the function within the ODD have been identified and detailed safety requirements derived that maintain the safe driving principles under all sets of environmental conditions.

- assurance targets for the identified hazards have been derived that are commensurate with the overall definition of acceptably safe.

- the probability of unknown critical scenarios or triggering conditions leading to hazards has been reduced through resilience built into the system design as well as validation and continuous monitoring in the field.

- the system design is inherently capable of achieving the assurance targets (safe-by-design) and the technical limitations of the system are well understood, such that, if necessary, a further restriction of the ODD could be defined in order to increase the overall safety of the system.

- the ability of the system to maintain its safety goals, even in the presence of known triggering conditions, has been verified.

The following posts in this series will investigate these points in more detail, in particular discussing the type of evidence that could be presented to support the claims. Such arguments could be presented using notations such as GSN (Goal Structuring Notation) to improve understanding of the structure and provide traceability between evidence and claims. One important note to add: standards and best practice for demonstrating the safety of automated driving are still in development. Additional confidence arguments will therefore be required to achieve trust in the assurance case, and in particular to explain how the evidence presented actively supports the claims made in it.
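To make the idea of traceability between claims and evidence a little more concrete, here is a minimal sketch in Python. It is not GSN and not a real assurance tool: the `Claim` class, its `unsupported` method and the evidence names are all hypothetical, chosen purely for illustration. The sketch arranges the six claims listed above under the top-level claim and reports which of them still have no evidence attached.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Claim:
    """A node in the argument: a claim backed by sub-claims or by evidence."""
    text: str
    evidence: List[str] = field(default_factory=list)
    subclaims: List["Claim"] = field(default_factory=list)

    def unsupported(self) -> List["Claim"]:
        """Return the leaf claims that have no evidence attached.

        A claim with sub-claims is treated as supported only through them.
        """
        if not self.subclaims:
            return [] if self.evidence else [self]
        gaps: List["Claim"] = []
        for sub in self.subclaims:
            gaps.extend(sub.unsupported())
        return gaps


# Evidence names are placeholders, not real artefacts.
top = Claim(
    "The system maintains safe behaviour within its ODD "
    "at a tolerable level of residual risk",
    subclaims=[
        Claim("ODD is sufficiently well defined and understood",
              evidence=["ODD specification (hypothetical)"]),
        Claim("Hazards identified and safety requirements derived",
              evidence=["Hazard analysis report (hypothetical)"]),
        Claim("Assurance targets are commensurate with 'acceptably safe'"),
        Claim("Probability of unknown triggering conditions has been reduced"),
        Claim("System design is inherently capable of meeting the targets"),
        Claim("Safety goals verified under known triggering conditions",
              evidence=["Verification results (hypothetical)"]),
    ],
)

gaps = top.unsupported()
for claim in gaps:
    print("No evidence yet:", claim.text)
```

Even a toy structure like this shows why an explicit notation helps: gaps in the argument become something you can list, rather than something a reviewer has to spot by reading prose.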

You can download a free introductory guide to assuring the safety of highly automated driving: essential reading for anyone working in the automotive field.

Dr Simon Burton

Director Vehicle Systems Safety

Robert Bosch GmbH

Simon is also a Programme Fellow on the Assuring Autonomy International Programme.