Blog post: Explainability — the bridge between safety and security in cyber-physical systems

Posted on Friday 30 August 2019

Safety and security are similar.

Each focuses on determining mitigation strategies for specific kinds of risks (accidental or originating from malicious attacks) by analysing potential disruptions, hazards and threats. As such, they employ similar analysis and reasoning techniques (e.g. fault trees, attack trees) for investigating failure modes, assessing their impact on the overall CPS behaviour and deriving strategies in the form of requirements or defences.

Safety and security have traditionally been considered in isolation, addressed by separate standards and regulations, and investigated by different research communities [2]. In practice, however, they are often interdependent.

More often than not, safety and security are at odds: safety is concerned with protecting the environment from the system; security is concerned with protecting the system from the environment [3].

As such, focusing on one might be at the expense of the other [4].

For instance, safety encourages stable software versions, while security encourages frequent updates, each of which requires re-qualifying and re-certifying the underlying CPS. Similarly, while safety implicitly embraces easy access to CPS components for fast and easy analysis, security explicitly adopts strict authentication and authorisation mechanisms as a way to increase the system’s ability to withstand malicious attacks.

A call for co-engineering

Recognising the similarities and differences between CPS security engineering and safety engineering, the research community has called for increased safety and security co-engineering [1,5,6].

Several challenges involved in co-engineering of safety and security originate in the different roles involved. Not only do safety engineers and security engineers need to understand each other, but also certification agencies must be able to comprehend the assurance cases provided by the development organisation.

Moreover, several other stakeholders, both internal and external, should understand architecture and implementation decisions related to safety and security. Examples include product managers, requirements engineers, software testers and, to some extent, the end users (e.g. drivers) and the general public.

Explanation-aware CPS development

Aligning safety and security requires us to overcome several engineering and communication barriers. In particular, we must bridge the cognitive gap between security and safety experts.

Explainability supports this objective.

Software explainability is a quality attribute that assesses the degree to which an observer (human or machine) can understand the reasons behind a decision taken by the system [7]. To realise explainable software that adheres to safety and security requirements, explanation must become a first-class entity throughout a system’s lifecycle.

An explanation-aware software development process should provide different models, techniques and methodologies to model, specify, reason about and implement explanations. Explanation-related artefacts produced in different stages should conform to a common conceptual framework, so that different stakeholders can build on them when developing other artefacts. For example, the safety cases created by a safety engineer can be based on the same explanation models and artefacts that a forensics analyst uses to build a security threat model and perform security threat analysis.

In particular, the process should be supported by strong techniques to analyse and reason about explanations to assure, for example, completeness, consistency and soundness.

Towards an explainability metamodel

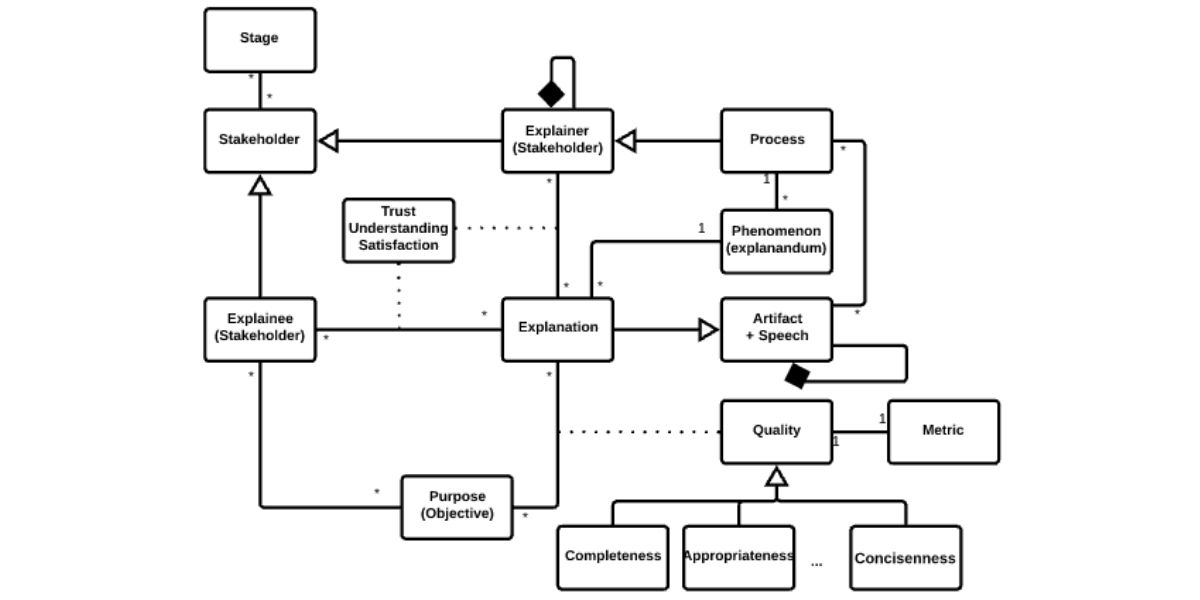

As the first step towards this goal, we employed a meta-modelling [8] approach to identify the core concepts and their relations involved in an explanation.

The core concepts of the meta-model are:

- Stakeholders are the parties involved in an explanation. In the context of safety and security co-engineering, these could be safety and security engineers. For a given explanation, each stakeholder is either an explainer or an explainee. The former provides an explanation, whereas the latter receives it. The explainer and the explainee are not necessarily human; they can also be tools or computer programs that produce or receive an explanation.

- A stage corresponds to a lifecycle phase and its associated activities. For a given explanation, both the explainer and the explainee are in a particular stage. These stages are not necessarily the same, and they most likely differ if the safety and security processes are not aligned.

- Explanations are answers to questions posed by explainees. To provide those answers, an explainer employs a process that draws on different artefacts, such as design documents, source code and standards. An explanation forms an implicit relation between explainer and explainee. Depending on the overall purpose of the explanation, this relation serves to increase trust or understandability. To ensure that an explanation is useful for the explainee, each explanation is associated with a quality attribute and a corresponding metric that quantifies it. One example of such an attribute is the extent to which the explanation is appropriate for the explainee.

- Representations are the forms in which an explanation is presented (e.g. textual or graphical). Which one is used depends on both the purpose and the explainee. An explanation is not limited to one representation, since it might be presented to different explainees with different needs.
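To make the relations between these concepts more concrete, the following is a minimal sketch of how they could be encoded as data types. It is an illustration only, not the authors' meta-model: all class, field and method names (`Stage`, `Stakeholder`, `Quality`, `Explanation`, `role_of`, `crosses_stages`) and the example values are assumptions introduced here.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Role(Enum):
    """Role a stakeholder plays in one particular explanation."""
    EXPLAINER = "explainer"
    EXPLAINEE = "explainee"


class Purpose(Enum):
    """What the explanation is meant to increase."""
    TRUST = "trust"
    UNDERSTANDABILITY = "understandability"


@dataclass(frozen=True)
class Stage:
    """A lifecycle phase and its associated activities."""
    name: str


@dataclass(frozen=True)
class Stakeholder:
    """A party involved in an explanation; may be a human or a tool."""
    name: str
    stage: Stage
    is_human: bool = True


@dataclass(frozen=True)
class Quality:
    """A quality attribute with a metric that quantifies it."""
    attribute: str
    metric: str
    value: float


@dataclass
class Explanation:
    """An answer to a question posed by an explainee."""
    question: str
    answer: str
    explainer: Stakeholder
    explainee: Stakeholder
    purpose: Purpose
    quality: Quality
    # Artefacts the explainer's process drew on (hypothetical identifiers).
    artefacts: List[str] = field(default_factory=list)
    # Representation kind (e.g. "textual") mapped to its content.
    representations: Dict[str, str] = field(default_factory=dict)

    def role_of(self, stakeholder: Stakeholder) -> Optional[Role]:
        """Return the stakeholder's role in this explanation, if any."""
        if stakeholder == self.explainer:
            return Role.EXPLAINER
        if stakeholder == self.explainee:
            return Role.EXPLAINEE
        return None

    def crosses_stages(self) -> bool:
        """True if explainer and explainee are in different stages."""
        return self.explainer.stage != self.explainee.stage


# Hypothetical example: a safety engineer explains a decision to a
# security engineer who works in a different lifecycle stage.
safety_eng = Stakeholder("safety engineer", Stage("hazard analysis"))
security_eng = Stakeholder("security engineer", Stage("threat analysis"))

example = Explanation(
    question="Why is the firmware version frozen?",
    answer="Each update requires re-certifying the system.",
    explainer=safety_eng,
    explainee=security_eng,
    purpose=Purpose.UNDERSTANDABILITY,
    quality=Quality("appropriateness", "explainee rating (0 to 1)", 0.8),
    artefacts=["safety case", "design document"],
    representations={"textual": "Each update requires re-certifying the system."},
)
```

Modelling the role as something derived per explanation, rather than as a fixed property of a stakeholder, reflects the point that the same party can be an explainer in one explanation and an explainee in another.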

Outstanding challenges

This meta-model is the first step toward an explanation-aware CPS development process for safety and security co-engineering. However, there are a number of open questions and challenges still to be solved:

- Extending, formalising and concretising the meta-model as the first stepping-stone to realising explanation-aware software development that facilitates co-engineering of safety and security.

- Identifying the quality attributes of explanations that are relevant to safety and security, and proposing (formal) modelling and analysis techniques to specify, analyse and quantify explanations so that these quality attributes can be assured.

- Providing methods to compose, abstract and refine explanations. Such methods are needed to guarantee scalability and traceability, so that explanations (of different stakeholders) in different stages can be linked to each other.

Dr Markus Borg, Senior Researcher, RISE AB, Sweden

Dr Simos Gerasimou, Lecturer in Computer Science, University of York

Dr Nico Hochgeschwender, Research Group Leader, German Aerospace Center

Dr Narges Khakpour, Senior Lecturer in Computer Science & Media Technology, Linnaeus University

Notes to editors:

This blog post has been partly driven by research carried out at the GI-Dagstuhl Seminar 19023 on Explainable Software for Cyber-Physical Systems