Challenges and remedies for developing safe automated driving functions

Posted on Wednesday 24 January 2024

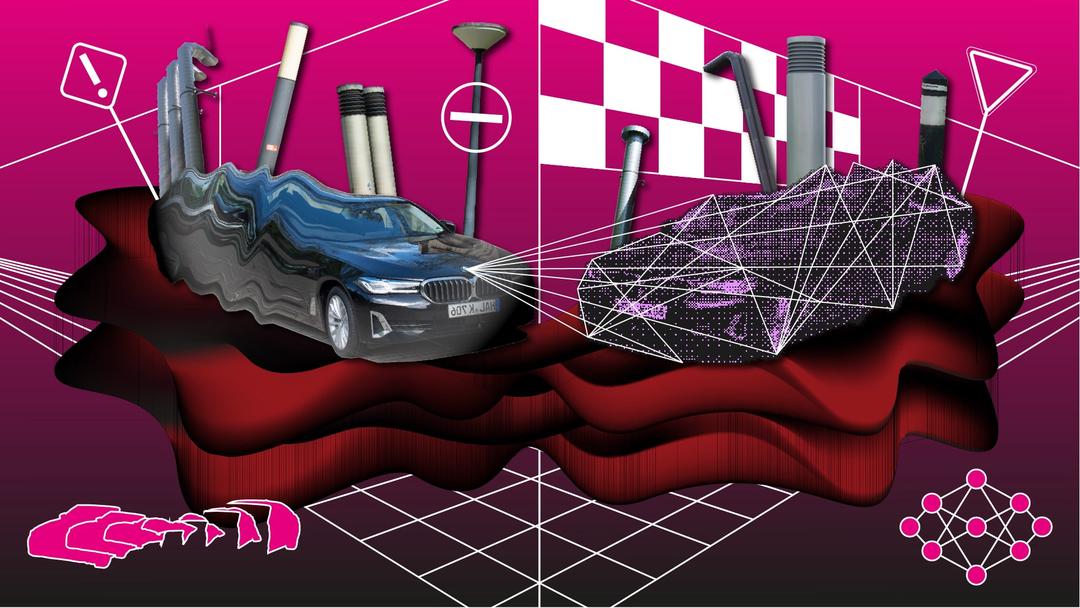

Recent advances of the Operational Design Domain (ODD) of Advanced Driver Assistance Systems (ADAS) in production include hands-off driving, automatic lane changes and highly automated driving on highways. Pushing these functional boundaries has significantly contributed to the methodology for providing safety guarantees for challenging automated driving maneuvers to comply with relevant safety standards for minimizing both expected and unexpected unsafe behaviors.

Understanding ongoing challenges

However, developing automated and autonomous driving, particularly in urban environments, still poses several challenges for pre-deployment evaluation and in-service monitoring:

- Availability: ADAS operate within complex environments which cannot be fully specified at design time. Thus, it is not possible to estimate the ODD and all possible system states to assess safe operation in advance.

- Specification: Specifying the required system behavior or defining executable pass/fail criteria under all possible conditions is very challenging, e.g., due to a strong situation-dependency and the large scenario set.

- Complexity: the complexity of the function and its technical realization over numerous components increase with an extension of its capabilities. This includes using artificial-intelligence-based methods to achieve an improved performance.

- Scalability: ADAS software (or a subset thereof) may be reused on different platforms, which may include different on-board sensors, electronic control units, and actuators, or off-board data collection, analysis, and evaluation infrastructure.

Traditional remedies

Developing a robust ADAS requires incorporating the refinement of the safety goals and requirements which the system can be evaluated against into the design process. Then, using contract-based design the system is decomposed into individual components. A contract specifies what each component expects from its operational context (assumptions) and which assurances can be made to its context (guarantees). These contracts allow compositional arguments to be made for overall system properties. For example, for following a lane, a road component should fuse relevant sensor inputs and provide the best possible estimate of the lane boundaries with uncertainty limits. Based on that, a behaviour planner provides a trajectory for the motion of the vehicle within the lane boundaries, which, e.g., does not violate lateral acceleration limits within the next few seconds. Then, a controller should compensate bounded disturbances along the trajectory and provide an input for the actuators.

A large part of contract satisfaction can be verified independently for each component by using various types of testing. This may include component testing and static code analysis to assure full code coverage and functional correctness. Additionally, falsification can be used to validate requirements or to provide guidance on control design by seeking an adversarial input either by choosing it randomly or by utilizing a model to guide the search for specification violations. Closed

loop properties can be checked by simulating the interfaces of the system within a synthetic environment. The fidelity of the simulation and representativeness of its results must be validated for the results of the simulation to be used as a significant contribution to the safety case and to justify reduced real-world vehicle testing. However, even upon using all testing options, it remains open how we can be sure that we have:

- understood the environment sufficiently to identify all relevant driving situations,

- derived a complete set of requirements for the system,

- decomposed them equivalently into contracts between the components,

- and covered them fully by suitable automated tests.

To answer this question, one often resorts to processes and abstracted, reduced or gray box models, which combine statistics with structural reasoning to argue that the remaining gaps do not compromise the safety of the system. Alternatively, there are formal methods which can guarantee that for a given model, the ADAS satisfies its specification.

Remedies with completeness guarantee

Monitoring (or runtime verification) allows checking particular system behaviors against formal criteria. Thus, it may be beneficial for discovering edge cases that had not been considered in the initial design. Monitoring can be performed online, i.e., checking the current behavior, or offline, i.e., checking a (finite set of) recorded behavior(s).

Formal verification, like monitoring, may also uncover new edge cases. It requires a suitable model for the environment and the ego vehicle, which provides a complete analysis over all possible model behaviors (and possibly uncertainties). Therefore, formal verification usage is often limited to restricted properties of certain functional components such as behaviour planners or controllers, where formal models can be often obtained and safety properties can be manually mapped to a theorem proving, model checking or a barrier certification problem. However, no general approach has been presented so far to automatically create theorems, symbolic models, or barrier certificates for a general traffic situation, ego vehicle model and software component. Therefore, applying formal verification is often limited to safety specifications for simple vehicle following, lane keeping / changing controllers, assuming a simple behavior model for other traffic participants.

A more scalable alternative can be to consider worst-case behavior models to verify safety of a behavior planner or controller, at the price of an overly conservative certification. Another verification technique used in the ADAS context is based on reachability analysis - the set of reachable states of the environment and vehicle models are computed over time, and the behavior is safe, if no reachable state enters an unsafe region. Limiting factors for applying reachability analysis are the complexity of computing reachable states and using it for verifying existing components.

Instead of proving that an existing behaviour planner or controller is safe, using controller synthesis, a controller satisfying constraints and formal requirements can be computed automatically. However, describing ADAS comfort behavior requirements formally is a very challenging endeavor and, therefore, synthesized controllers have been mostly used as safety limiters or monitors for existing controllers.

Each type of traditional testing plays an important role for the overall verification and validation strategy and is needed to gather evidence for specific system properties. Even when formal verification of the complete specification for the full chain of effects is infeasible, applying automatic verification methods with completeness guarantees for a part of the specification to individual automated driving components may greatly enhance availability in complex scenarios and provide a remedy for their growing complexity and challenging scalability.