New healthcare platform launched to bridge gap between AI research and safe clinical adoption

Posted on Wednesday 26 November 2025

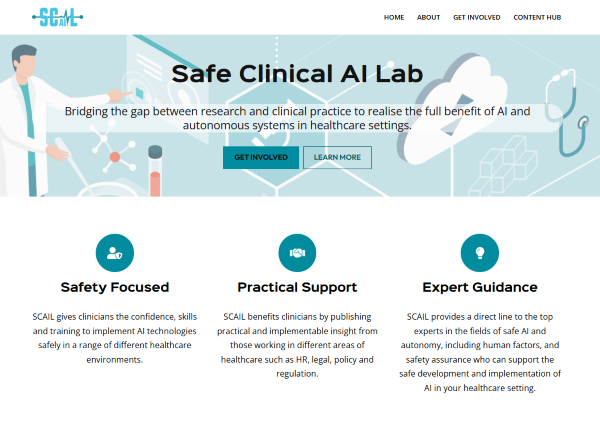

The new platform, the Safe Clinical AI Lab (SCAIL), was developed in response to feedback from academics and clinicians at the Centre for Assuring Autonomy (CfAA)'s ‘AI Safety in the NHS Conference’. Attendees raised concerns about the gap between what developers promise of AI tools and the practical, safe use of those tools in complex healthcare settings.

Developed by Professor Ibrahim Habli, Dr Yan Jia, Professor Mark Sujan and Professor Tom Lawton, the lab will also address the gap between academic research and the safe clinical adoption and rollout of AI tools across different healthcare settings.

Professor Ibrahim Habli, SCAIL’s safety assurance lead, said: “Healthcare is notoriously complex and the safe introduction of AI tools and technologies requires input from multiple stakeholders. SCAIL is a channel for those experienced voices, such as those from law, ethics, and patient groups, to come together and work alongside clinicians and researchers to address concerns, identify solutions and find ways to work closer together.”

The team’s combined expertise, together with contributions from professionals across the healthcare spectrum, will make the lab an important resource as the deployment of AI tools grows across the sector.

Professor Tom Lawton, SCAIL’s clinical lead from Bradford Teaching Hospitals NHS Foundation Trust, added: “There is a huge opportunity from AI, but it won't fix the health system if it makes healthcare workers' jobs harder. Real safety comes from AI which supports rather than supplants the clinician; as one patient put it: ‘Our confidence has always been in people’. SCAIL is a place where clinicians can come to see past the hype and gain a deeper understanding of how to work together with AI tools to improve patient care and reduce workload and costs.”

SCAIL promotes a human-centred view of healthcare AI, recognising that safety and performance depend on how people, technology and organisations work together. Rather than focusing only on the AI tool itself, SCAIL helps focus design and evaluation on the interactions that shape real clinical work, ensuring that AI supports staff, enhances patient care and strengthens the wider health system.

Notes to editors:

For more information on SCAIL or the CfAA’s safe AI healthcare research, please contact the CfAA’s communications manager, Sara Thornhurst, at sara.thornhurst@york.ac.uk

About the Centre for Assuring Autonomy

The Centre for Assuring Autonomy (CfAA) is a £10m partnership between Lloyd’s Register Foundation and the University of York. The CfAA is an independent research and innovation centre which builds on the work of the Assuring Autonomy International Programme. It is the only centre anywhere in the world wholly dedicated to the safety assurance of AI, autonomous systems, and robotics, working across all sectors and with partner organisations across the globe. Based at the University of York, UK, the CfAA is influential in the areas of AI regulation, software engineering, safety engineering, and software safety, supporting industry and regulators in the adoption and use of its assurance frameworks, and carrying out novel research.