Assuring AI in nuclear decommissioning

Posted on Tuesday 24 June 2025

Launched last year, the project titled Assuring the safe use of AI-enabled autonomy in nuclear Decommissioning (AID) saw Principal Investigator Dr Richard Hawkins and Research Associates Dr Calum Imrie, Dr Ioannis Stefanakos and Dr Sepeedeh Shahbeigi investigate how reinforcement learning (RL) could be safely applied to a robotic arm designed to carry out decommissioning tasks inside contaminated gloveboxes.

These sealed enclosures are commonly used in nuclear facilities to handle radioactive materials while protecting operators through the use of built-in gloves. Robotic arms, operated remotely or autonomously, offer an additional layer of safety and precision. By using AI to train the robotic arm to perform tasks independently, the team aimed to improve both operational safety and efficiency in these high-risk environments. The project was supported by funding from the Robotics and Artificial Intelligence Collaboration (RAICo), a joint initiative involving the UK Atomic Energy Authority, the Nuclear Decommissioning Authority, Sellafield Ltd, and the University of Manchester.

Safety analysis for safe deployment

To ensure the safe deployment of RL in an autonomous robotic system, we conducted a comprehensive safety analysis. This began by identifying the system's core functions, operating modes and failure modes, together with their corresponding severity classifications. A Functional Failure Analysis (FFA) was used to systematically explore potential failure conditions, the modes in which they could occur, their consequences, and the associated severity levels. To complement this, a Software Hazard Analysis and Resolution in Design (SHARD) study was conducted to identify potential software-related hazards, particularly those arising from the RL controller.

These analyses informed the development of a set of Top-Level Safety Requirements (TLSRs), which define the critical safety expectations for the system during autonomous operation. These include correct object classification and placement, safe handling of objects, reliable task completion, and preservation of glovebox integrity and internal spatial arrangements. Each TLSR was linked to one or more severity classes representing the potential impact of its violation. The analysis rested on three key assumptions: objects are disentangled before manipulation; the system does not depend on manual correction or on external sensing beyond the system boundary; and all safety responsibilities are fully allocated to the autonomous system. Together, the FFA and SHARD analyses provided a structured and robust foundation for deriving, validating and managing safety requirements throughout the system's development. The TLSRs are vital because they guide the design and implementation of a safe system. This is evident in our own case study of the RL-controlled arm, described later, where we show how we applied an assurance methodology created by researchers within the Centre for Assuring Autonomy (CfAA).
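To make the link between FFA findings, TLSRs and severity classes more concrete, the following is a minimal Python sketch of a traceability structure. It is not the project's tooling: the four-level severity scale (`Severity`), the identifiers such as `TLSR-1`, and the example failure conditions are hypothetical, although the requirement wording follows the TLSRs listed above. The sketch records which FFA findings each TLSR addresses, reports the worst severity class linked to a requirement, and flags findings that no TLSR yet covers.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical severity scale; the project's actual classes are not stated.
    MINOR = 1
    MAJOR = 2
    HAZARDOUS = 3
    CATASTROPHIC = 4


@dataclass(frozen=True)
class FFAEntry:
    """One row of a Functional Failure Analysis (fields are illustrative)."""
    function: str
    failure_mode: str   # e.g. "loss", "incorrect", "untimely"
    consequence: str
    severity: Severity


@dataclass
class TLSR:
    ident: str
    text: str
    severities: set[Severity] = field(default_factory=set)
    sources: list[FFAEntry] = field(default_factory=list)

    def trace(self, entry: FFAEntry) -> None:
        """Link an FFA finding to this requirement and record its severity class."""
        self.sources.append(entry)
        self.severities.add(entry.severity)

    @property
    def worst_case(self) -> Severity:
        """Most severe class linked to this requirement."""
        if not self.severities:
            raise ValueError(f"{self.ident} has no linked severity class")
        return max(self.severities)


def uncovered(entries: list[FFAEntry], tlsrs: list[TLSR]) -> list[FFAEntry]:
    """Return FFA findings not yet traced to any TLSR (gaps in the analysis)."""
    traced = {e for t in tlsrs for e in t.sources}
    return [e for e in entries if e not in traced]


# Hypothetical example data, for illustration only.
place = TLSR("TLSR-1", "Objects are correctly classified and placed")
handle = TLSR("TLSR-2", "Objects are handled safely")
integrity = TLSR("TLSR-4", "Glovebox integrity and internal spatial arrangements are preserved")

drop = FFAEntry("grasp object", "incorrect", "object dropped onto glovebox floor", Severity.MAJOR)
strike = FFAEntry("move arm", "incorrect", "arm strikes glovebox wall", Severity.CATASTROPHIC)
misplace = FFAEntry("place object", "incorrect", "object placed in wrong container", Severity.MAJOR)

handle.trace(drop)
integrity.trace(strike)

print(integrity.worst_case.name)
print([e.consequence for e in uncovered([drop, strike, misplace], [place, handle, integrity])])
```

Here the misplacement finding is reported as uncovered until it is traced to `TLSR-1`. Keeping findings immutable (`frozen=True`) makes them hashable, so the coverage check is a simple set lookup.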

Simulation-to-Physical pipeline

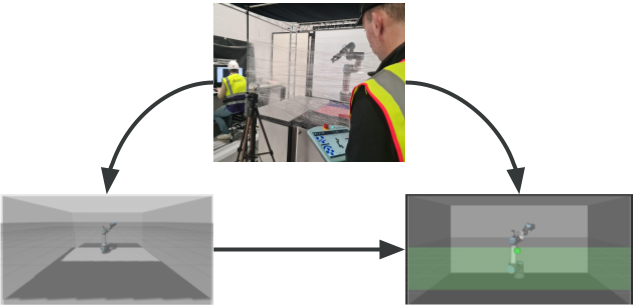

Following discussions with researchers based at the RAICo1 lab, we constructed a physical setup from available resources and equipment that approximately matches theirs. It consists of an industrial robotic arm (UR16e manipulator) equipped with a two-finger gripper (OnRobot RG2) and two 3D cameras (Intel RealSense). The cameras are placed on either side to maximise coverage of the "glovebox" workspace. ISA also has the equipment and dedicated technical staff to create custom parts for experimental setups. This proved very beneficial for AID's setup, from a makeshift "glass wall" that can introduce visual problems for research purposes to 3D-printed "fuel pellets".

Because an RL agent needs a large volume of observations to learn optimal behaviours, simulators are a valuable tool, saving both cost and time. In this project we replicated our physical setup in Gazebo, a robotics simulator, giving us an in-house pipeline that served as a case study for investigating our research questions. Building this pipeline has the additional benefit of making future research on safe robotic manipulators easier.

Assuring RL’s safe deployment

In AID, our AMLAS methodology (Assurance of Machine Learning for use in Autonomous Systems) played a key role, as the project gave us the opportunity to investigate how AMLAS can be applied to RL components. Using our in-house pipeline, we applied AMLAS to the training and deployment of the RL-controlled arm, making the project an ideal environment for establishing how the methodology can be adapted for RL. For example, because we trained the agent in a simulator, we must also provide assurance for the choice of simulator and for the simulation setup itself. Assurance evidence will include the accuracy of the physics engine and the similarity of the simulated setup to the physical operational domain. We aim to perform a more comprehensive iteration of AMLAS within the coming months; stay tuned!
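As one illustration of what "similarity of the simulated setup to the physical operational domain" evidence might look like, the following Python sketch compares paired outcomes of the same scripted placement trials run in simulation and on the physical rig. This is an assumption for illustration, not part of AMLAS or the project's method: the planar `Pose2D` type, the metre units in a glovebox frame, the 1 cm tolerance and the pose values are all hypothetical placeholders, not project measurements.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float  # metres, in an assumed glovebox reference frame
    y: float


def placement_errors(sim: list[Pose2D], real: list[Pose2D]) -> list[float]:
    """Per-trial distance between simulated and physical final object positions."""
    if len(sim) != len(real):
        raise ValueError("need one simulated and one physical result per trial")
    return [math.hypot(s.x - r.x, s.y - r.y) for s, r in zip(sim, real)]


def similarity_evidence(sim: list[Pose2D], real: list[Pose2D], tolerance_m: float) -> dict:
    """Summarise sim-to-real discrepancy against a (hypothetical) acceptance tolerance."""
    errors = placement_errors(sim, real)
    if not errors:
        raise ValueError("no trials to compare")
    worst = max(errors)
    return {
        "trials": len(errors),
        "mean_error_m": sum(errors) / len(errors),
        "max_error_m": worst,
        "within_tolerance": worst <= tolerance_m,
    }


# Illustrative placeholder values only, not data from AID.
sim_runs = [Pose2D(0.10, 0.20), Pose2D(0.30, 0.25)]
real_runs = [Pose2D(0.103, 0.204), Pose2D(0.30, 0.25)]
report = similarity_evidence(sim_runs, real_runs, tolerance_m=0.01)
print(report)
```

Using the worst-case error rather than the mean for the pass/fail check is a deliberately conservative choice; in a real assurance case the metric, tolerance and number of trials would themselves need justification.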

An engaging and impactful project

Throughout AID, we actively engaged with key figures and organisations across the nuclear, AI and robotics sectors, and this engagement was central to the impact and success of the project. Demonstrations of the AID testbed were delivered to a wide range of visitors to the Institute for Safe Autonomy (ISA), including senior government and academic representatives such as the Government Chief Scientific Adviser, Dame Angela McLean, and the DSIT Chief Scientific Adviser, Professor Chris Johnson. The project was also showcased at several high-profile events, including the RAICo showcase, where it was featured alongside other cutting-edge developments in robotics and AI in the nuclear sector. A general demonstration of the testbed was provided to industry leaders and policymakers, fostering wider awareness and discussion around the safe use of AI in nuclear decommissioning.

In addition to hosting visitors, we presented the project and delivered live demonstrations at both the Safety-Critical Systems Symposium (SSS'25) and an Advanced Research and Invention Agency (ARIA) event held at ISA. These sessions offered opportunities both to communicate the project's outcomes and potential applications and to gather feedback from a broad audience spanning academia, industry and government. A poster summarising the project's objectives and key findings was also made available to attendees, further supporting outreach and knowledge-sharing.

We were also invited to present at the Nuclear Decommissioning Authority (NDA) Robotics and AI Centre Expertise Forum, which took place on the 17th of June. Our presentation of the work produced in the AID project generated useful discussion with those in attendance. For example, when an audience member asked about 'destructive testing', i.e. deliberately testing systems to the point of failure, Dr Imrie, who was presenting, said that he finds such tests invaluable because the data they provide help us rigorously construct assurance arguments that demonstrate our understanding of the system's behaviour. Fundamentally, a lack of testing could prove even more costly in the long term, as it could lead to large investment with little to no return.

Additionally, we organised a dedicated workshop, held at ISA, to showcase AID and to foster collaborative discussions around the identified priority challenges and topics. These discussions, held with attendees from academia, industry and regulators, generated valuable input, highlighting shared challenges across the sector and suggesting potential approaches to address them.

Perhaps most significantly, the workshop underscored the strong commitment across the nuclear sector to ensuring the safe integration of AI and autonomous systems in safety-critical applications. Attendees demonstrated a clear willingness to collaborate, with broad consensus that progress in this area will require coordinated, cross-sector efforts. There was strong interest in continuing this momentum through future workshops to maintain dialogue, share new findings, and explore opportunities for end-to-end demonstrator projects. We aim to sustain it by building connections with stakeholders and further disseminating our work within the nuclear domain. Participants represented a wide range of organisations from academia and industry, and you can read more about the workshop in our report. We also want to thank Dr Erwin Lopez from RAICo1 and Dr Andrew White from the Office for Nuclear Regulation (ONR) for their thought-provoking presentations at the workshop.

Download the Safety Assurance of Nuclear Decommissioning Poster (PDF, 4,247 KB)

Download the RAICo CfAA workshop report (PDF, 2,879 KB)