Increased collaboration between humans and robots is a major opportunity for industrial manufacturing. Yet it raises complex safety issues, which are a critical barrier to adoption.

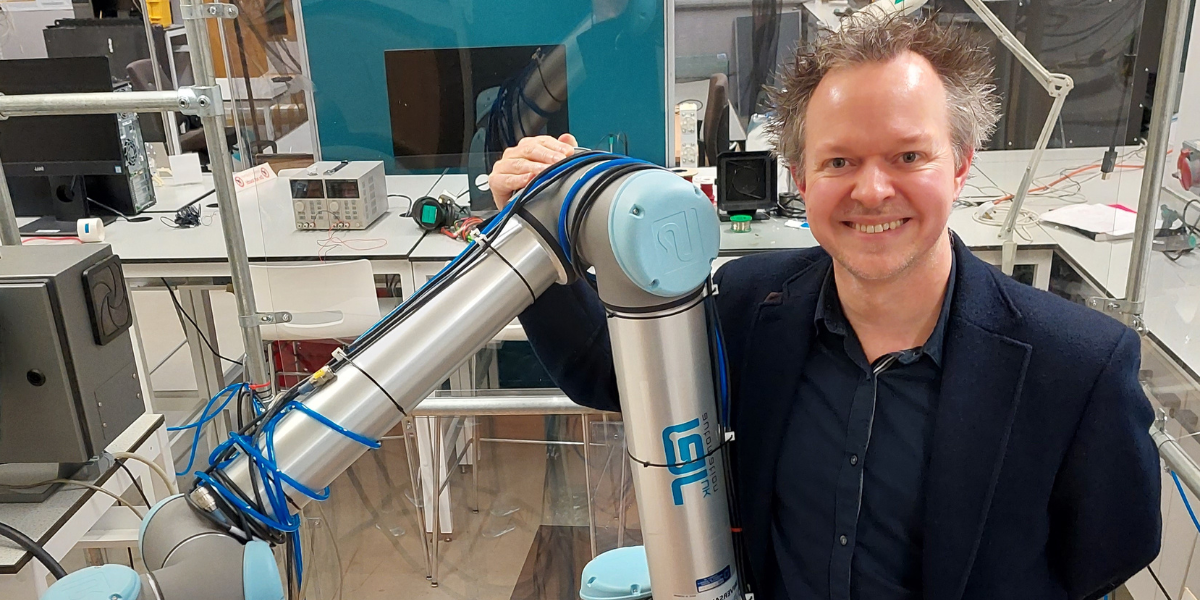

Dr James Law is a roboticist and the Director of Innovation and Knowledge Exchange at Sheffield Robotics. He led the CSI:Cobot demonstrator project.

Before the project, industry was interested in collaborative robots but did not know how to get them working safely alongside humans. As a result, robots were placed in safety cages, and with that the potential benefits of collaboration were lost. The CSI:Cobot project paved the way for the removal of some of these safety barriers.

James and the Sheffield team recognised that by involving the relevant stakeholders at an early stage, they were building the trust and confidence critical to adoption. Without it, these novel approaches would likely have met with pushback during implementation. Ultimately, securing the trust of the workforce will be critical, and this has been an integral part of the work.