Safe AI and autonomous systems guidance

Safe AI and autonomous systems methodologies

In addition to being developed in partnership with industry, our ready-to-use methodologies have been validated and peer-reviewed. Both AMLAS and SACE are actively used and recommended by organisations across multiple domains, by regulators and by Government to produce safety assurance cases for both AI and autonomous systems (AS). You can also find examples of AMLAS and SACE in use in our Body of Knowledge.

Access and download Assurance of Machine Learning for use in Autonomous Systems (AMLAS) and Safety Assurance of autonomous systems in Complex Environments (SACE) below.

The BIG Argument

Our BIG Argument sets out a way to bring the entire safety case argument together in one comprehensive approach. It combines our existing methodologies and also addresses the development of frontier AI technologies.

- Learn more about our BIG Argument for AI Safety cases

- Download the BIG Argument paper

- Download our BIG Argument factsheet: BIG Argument guidance downloadable brochure (PDF, 603kb)

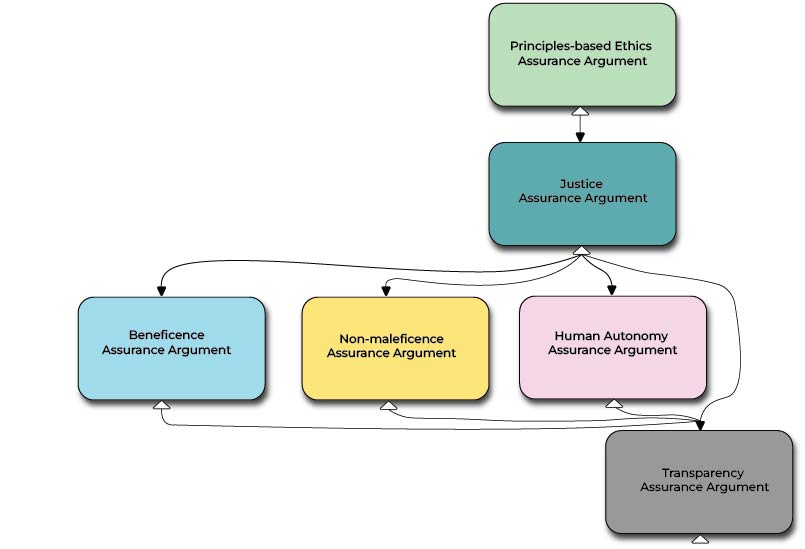

PRAISE

PRAISE is our ethical framework for structuring a principles-based ethics assurance argument. Engineers, developers, operators or regulators can use it to justify, communicate or challenge a claim about the overall ethical acceptability of using AI in a given socio-technical context.

- Learn more about PRAISE

- Download our paper on PRAISE

- View our PRAISE case study

- Download our PRAISE factsheet: PRAISE guidance downloadable brochure (PDF, 749kb)

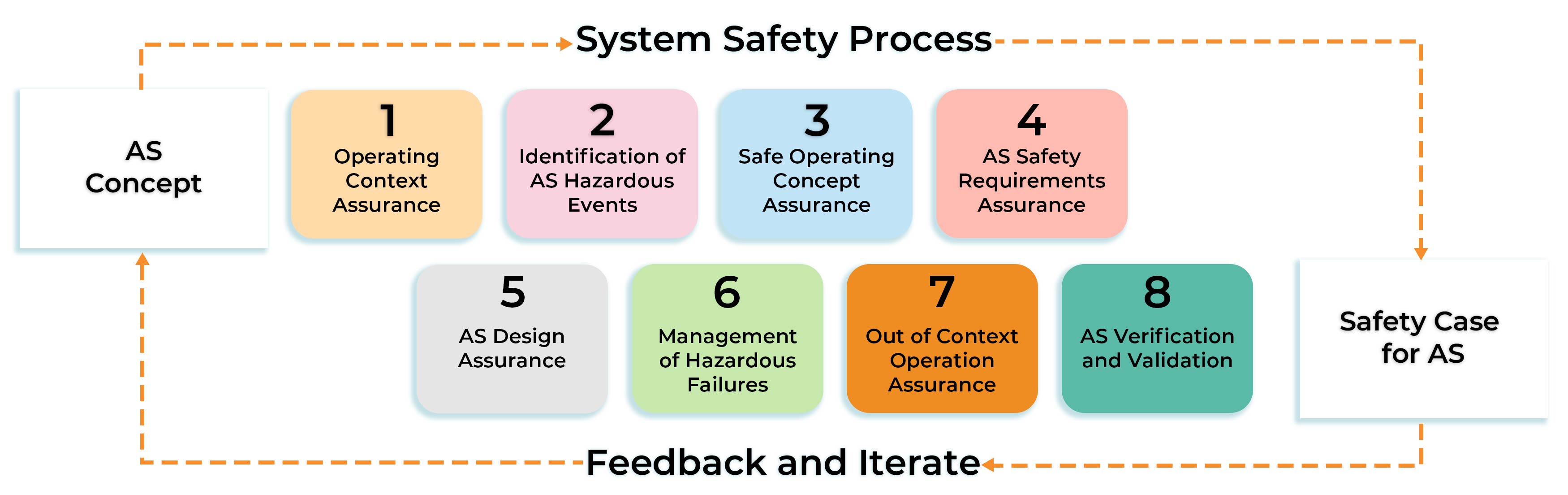

SACE

SACE is a free-to-use methodology that enables the creation of a safety case covering system-level assurance activities. You can use and access SACE in the following ways:

New to SACE? Learn more about how we developed it or read our factsheet: SACE guidance downloadable brochure (PDF, 819kb)

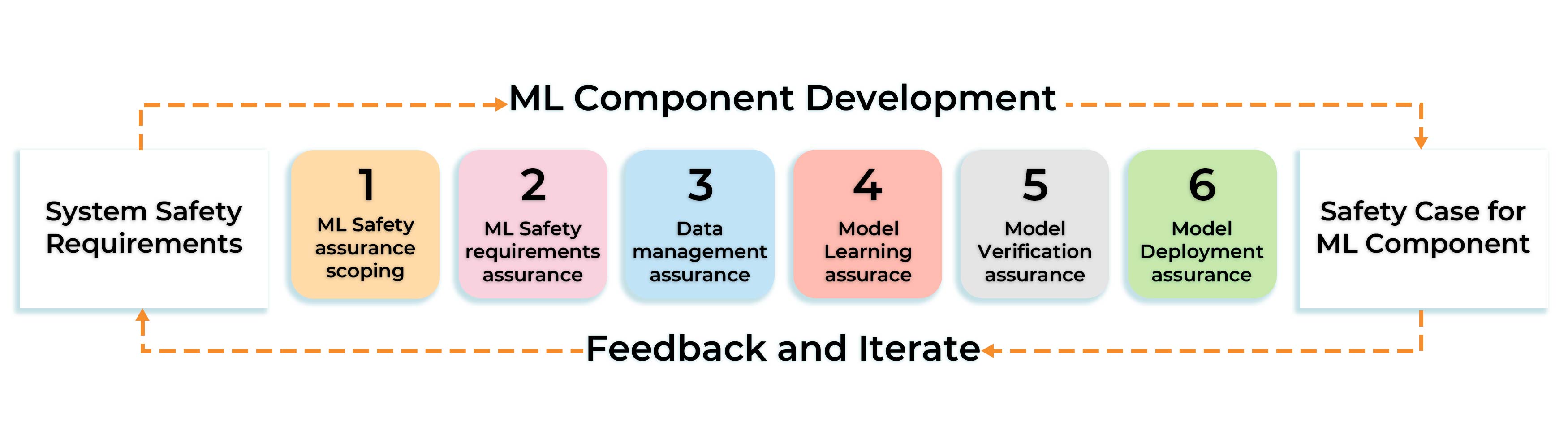

AMLAS

AMLAS is a free-to-use methodology that enables the creation of a safety case for a machine learning component. There are multiple ways to access AMLAS, depending on what you need:

- An AMLAS downloadable PDF

- An interactive version of AMLAS

New to AMLAS? Download our factsheet: AMLAS guidance downloadable brochure (PDF, 750kb)

INSYTE

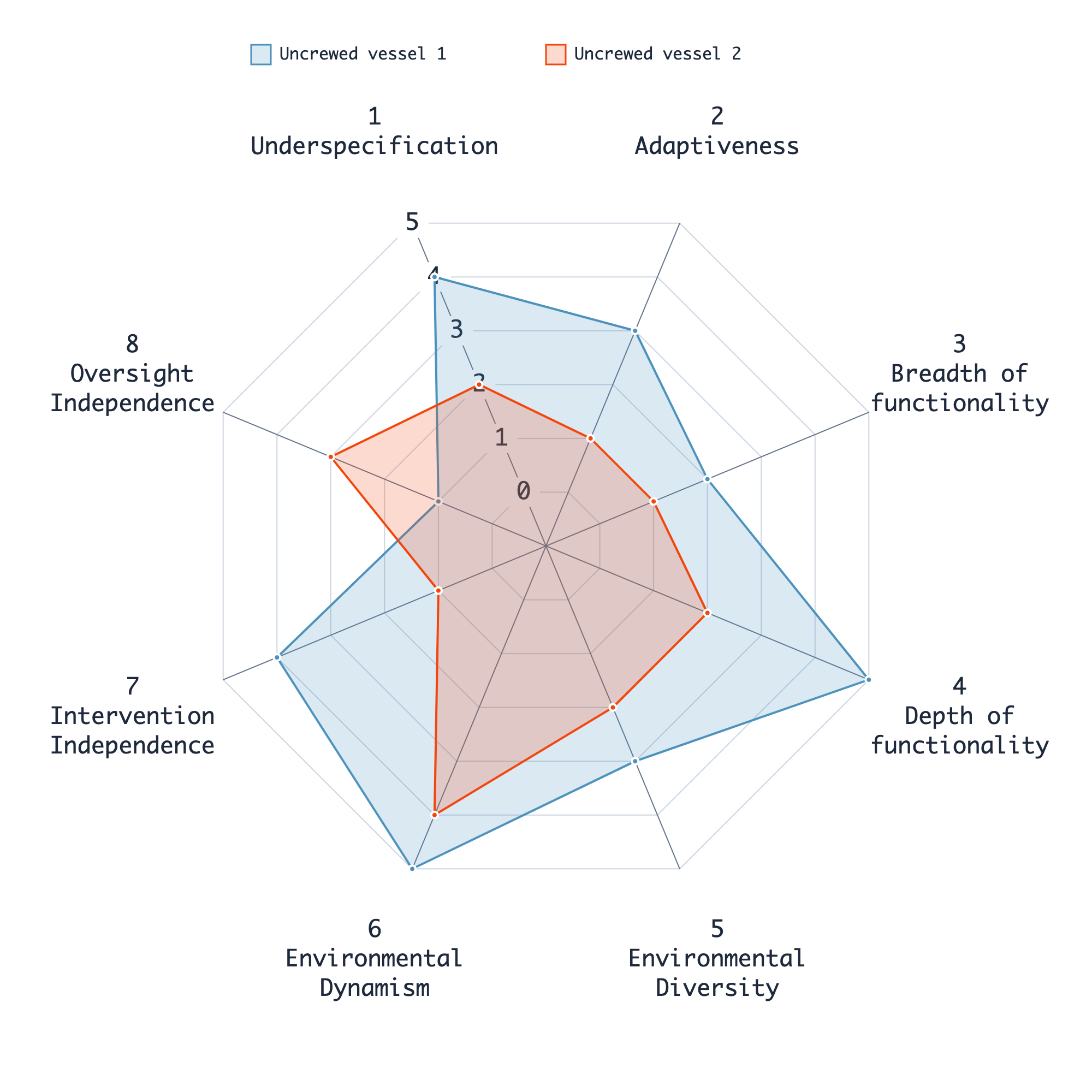

INSYTE is our classification framework for AI systems ranging from traditional to agentic. It is designed to support cross-stakeholder communication, to inform design, development and deployment decisions, to facilitate safety engineering and assurance, to provide a classification system for regulatory and/or certification purposes, and to help inform decisions about liability.

- Learn more about INSYTE

- Use the free, online INSYTE tool to classify your AI system