Foundational research pillars

The research we are doing in York is focused on core technical issues arising from the use of robotics and autonomous systems in critical applications.

We have been advancing our research on some of the core technical issues that remain for the safety assurance of RAS and have established a new structure to focus our work. This includes five key pillars of research:

Contact us

Centre for Assuring Autonomy

assuring-autonomy@york.ac.uk

+44 (0)1904 325345

Institute for Safe Autonomy, University of York, Deramore Lane, York YO10 5GH

Lead researchers - Richard Hawkins and Ibrahim Habli

View AMLAS on our guidance website (external site)

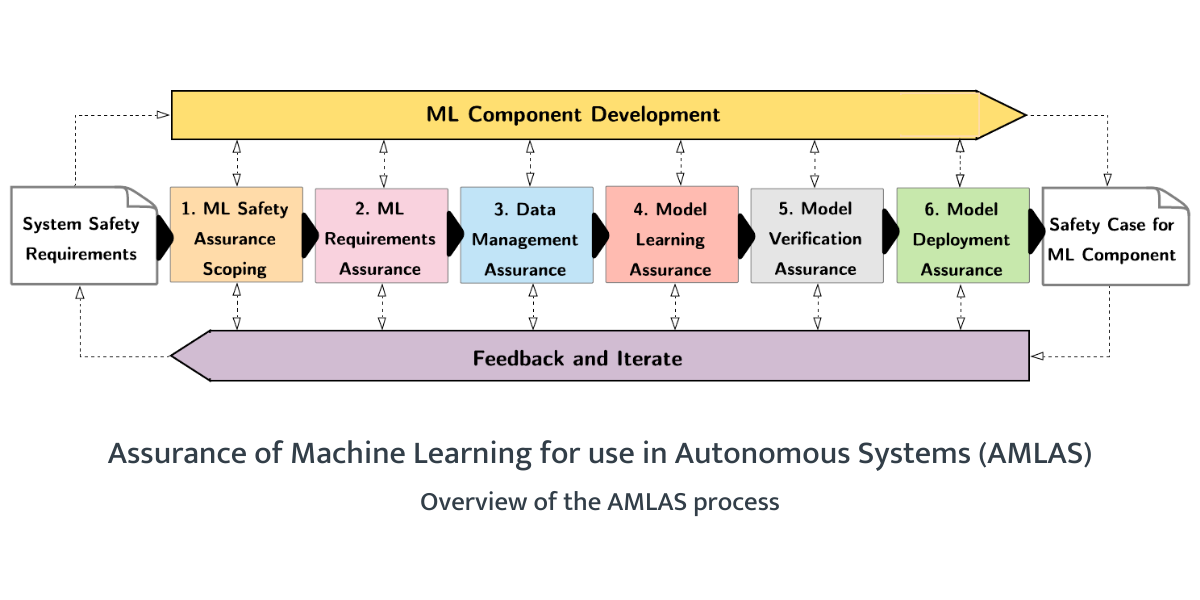

The first published guidance from our research pillars is our AMLAS methodology (Assurance of Machine Learning for use in Autonomous Systems). This has been peer-reviewed by our Fellows and experienced engineers from multiple industry domains.

“AMLAS provides us with a framework to integrate safety assurance of neural networks into our development process and build a compelling argument for our safety case.” Dr Matthew Carr, Co-Founder and Chief Executive Officer, Luffy AI

AMLAS comprises:

- a set of assurance activities that integrate with the development of ML components

- defined assurance artefacts relating to those activities

- safety case patterns to guide the development of a compelling safety case for ML components

The integration of activities, artefacts, and safety case patterns ensures AMLAS provides a practical and coherent approach. The guidance provides practical notes and examples, creating a complete handbook for safety engineers, developers, and regulators.

AMLAS is already used by colleagues across the globe to assure the safety of their machine learning (ML) components. The methodology is being further evaluated through the development of case studies in various domains, including healthcare, space, manufacturing and automotive. In a recent paper in BMJ Health and Care Informatics, published as part of the AI Clinician demonstrator project, the team applied the principles of the AMLAS methodology to the AI Clinician, which informs the treatment of sepsis.

Safety assurance case for an ML wildfire alert system

The team from the ACTIONS demonstrator has created the first fully developed safety case for an ML component containing explicit argument and evidence as to the safety of the machine learning. Read the paper:

- Hawkins, R., Picardi, C., Donnell, L., and Ireland, M. "Creating a safety assurance case for an ML satellite-based wildfire detection and alert system". arXiv (2022).

Lead researchers - Richard Hawkins and Mike Parsons


The focus of SACE is on the overall system-level assurance activities, particularly considering the interactions of the autonomous system with the complex environment. Similar to AMLAS, this guidance defines a safety assurance process along with corresponding safety case patterns, leading to the creation of a compelling safety case for the system.

Lead researchers - John McDermid and John Molloy

The focus for SAUS is split into two principal areas of research. The first is work to explain the nature of failures in the understanding component of autonomous systems, reflecting the sense-understand-decide-act (SUDA) model. This work examines failures that arise from the technical and theoretical limitations of sensors and ML algorithms, as well as those that have been identified through observation and analysis of deployed systems.

The second area is the development of an adaptation of the Hazard and Operability Analysis (HAZOP) process for the safety assurance of the understanding component, informed by the theoretical and observed failure modes. Confidence in this approach comes from observing the hypothesised failure modes in reports relating to deployed systems (e.g. fleets of road vehicles) as they become more widespread.

This work is closely aligned with the safety assurance work on a team of robots that will be deployed in the Institute for Safe Autonomy building and will use the robots’ perception suites as a case study.

Lead researcher - Radu Calinescu

This pillar covers the elicitation and validation of safety requirements for decision-making (e.g. path planning) in autonomous systems, failure analysis and propagation for decision-making, verification of decision-making, and the safety case for decision-making in autonomous systems.

Two main research strands are being explored. The first is the development of a process for the analysis and assurance of autonomous decision-making that builds on existing safety engineering techniques. The second focuses on autonomous systems that use deep neural network (DNN) classifiers for the perception step of their decision-making, and is developing a new method for the correct-by-construction synthesis of discrete-event controllers for these systems.

Research papers

- Douthwaite, J.A., Lesage, B., Gleirscher, M., Calinescu, R., Aitken, J.M., Alexander, R., and Law, J. "A modular digital twinning framework for safety assurance of collaborative robotics" in Frontiers in Robotics and AI, 8, 2021.

- Gleirscher, M., Calinescu, R., and Woodcock, J. "RiskStructures: A design algebra for risk-aware machines" in Formal Aspects of Computing, 2021.

- Gleirscher, M., Johnson, N., Karachristou, P., Calinescu, R., Law, J., and Clark, J. "Challenges in the safety-security co-assurance of collaborative industrial robots", in The 21st Century Industrial Robot: When Tools Become Collaborators pp 191-214 (Springer).

- Gautam, V., Gheraibia, Y., Alexander, R., and Hawkins, R. "Runtime decision making under uncertainty in autonomous vehicles". Proceedings of the Workshop on Artificial Intelligence Safety (SafeAI 2021).

Lead researchers - Ibrahim Habli and Zoë Porter

This pillar will cover legal acceptance, regulatory compliance, accounting for ethical considerations, risk acceptance, and public trust.

Research has so far followed two main strands. The first is the development of a methodology for including ethical principles in the development and assurance of autonomous systems. A workshop held in January 2021 helped to shape the direction of this work. The event, ‘From Ethical Principles to the Ethically-Informed Engineering of Autonomous Systems’, brought together members from the engineering, ethics and regulation communities. The second is the expansion of our interdisciplinary research on responsibility. This has led to an ambitious project, funded by the UKRI’s Trustworthy Autonomous Systems Programme and commencing in January 2022, to develop a framework for tracing and allocating responsibility for autonomous systems. This project brings together engineers, lawyers, philosophers, developers and the public to address one of the most important unanswered questions about autonomous systems, and a condition of their societal acceptability: who is responsible for the decisions and outcomes of autonomous systems?

Research papers

- Porter, Z., Habli, I., and McDermid, J. "A principle-based ethical assurance argument for AI and autonomous systems". arXiv (March 2022).

- McDermid, J., Porter, Z., and Jia, Y. "Consumerism, contradictions, counterfactuals" (PDF, 914kb) for Safety-Critical Systems Symposium, 2021.

- Porter, Z. "From ethical principles to the ethically-informed engineering of autonomous systems: workshop report", February 2021.

- McDermid, J., Jia, Y., Porter, Z., and Habli, I. "Artificial intelligence explainability: the technical and ethical dimensions" in Philosophical Transactions of the Royal Society A, August 2021.
