Disclaimer: This project is now finished and this news section is no longer being updated. For the latest AudioLab news, please go to the AudioLab website.

20.6.18: SADIE II - A Brand New Collection of Binaural Data

Following the success of the original SADIE database, extensive research efforts have been put into developing a second state-of-the-art collection of binaural measurements with supporting anthropomorphic data. This time, measurements were taken under both anechoic (HRTFs) and non-anechoic (BRIRs) conditions.

In addition, headphone impulse responses and inverse equalisation filters for the open-back beyerdynamic DT990s are also provided. Anthropomorphic data of human subjects is available in the form of 3D head scans and Hi-Res scale images of each ear. The number of HRTFs measured per subject has also dramatically increased, now boasting some of the highest resolution data sets available at this time. Either 2114 or 2818 unique measurements are available per human, with 8802 measurements available for the dummy heads. A new set of compatible Ambix decoders has also been released. Follow the link to browse the new range of data and try out some of the decoders!

20.4.17: Google adopt SADIE filters for VR pipeline

We are proud to announce that the SADIE KU100 HRTF dataset is now integrated into the YouTube 360/Google VR pipeline. We have been collaborating with Google for several months, exploring the benefits of switching from Google's THRIVE HRTF set to the SADIE virtual loudspeaker database. Google VR Audio enables Ambisonic spatial audio playback in Google VR SDK, YouTube 360/VR, Jump Inspector and Omnitone. Consumers of content for these platforms can now hear improved spatial audio quality through use of the SADIE filters. To find out more about how Google have utilised the database and to download the filters, follow the link to our SADIE for Google VR page.

23.9.16: Interactive Audio Systems Symposium, University of York

The University of York hosted a one-day symposium dedicated to the topic of interactive audio systems on September 23rd, 2016. The symposium explored the perceptual, signal processing and creative challenges and opportunities arising from audio systems affected through enhanced human-computer interaction. It consisted of original paper and poster sessions as well as invited presentations from key industry figures and demonstrations of emerging interactive audio technologies. Click here to have a look at the proceedings and find out more about what happened on the day.

20.9.16: Immersed in the UK, Digital Catapult London

By February 2016 investment in virtual reality and other immersive technologies had already exceeded $1.1bn, and this is an industry that looks unlikely to slow down anytime soon. A fair share of investment in immersive technologies has been down to entertainment and marketing, but recent advances, such as Dr Shafi Ahmed, surgeon and Co-founder of Medical Realities, broadcasting the first ever VR surgery, prove that the technology is broadening its horizons quickly. SMEs, academics and investors working in immersive technologies attended this event held at the Digital Catapult in London. The event was a fantastic opportunity to find out what the UK’s leading academic and research organisations and businesses are doing in this space. Attendees gained exclusive insight into market opportunities, challenges and exciting advances. Dr. Gavin Kearney showcased research from the SADIE project amongst other audio research work from the University of York. Panelists included:

Phil Channock, Marketing Manager, Draw and Code

Steve Dann, Co-founder of Medical Realities

Dave Haynes, Investor, Seedcamp

Humphrey Hardwicke, Creative Director, Luminous Group

Gavin Kearney, Lecturer in Audio & Music Technology, University of York

Eamonn O’Neill, Co-Director of CAMERA and Head of the Department of Computer Science at the University of Bath

Mark Sandler, Director of Centre for Digital Music, Queen Mary University of London

Mark Skilton, Professor of Practice, Warwick Business School

David Swapp, Immersive VR Lab Manager, University College London

20.9.16: BBC Parliament Interview York VR Researchers, Westminster, London

Dr. Gavin Kearney, Dr. Damian Murphy and Dr. Alex Southern attended the St. Stephen's Chapel Westminster Project conference to demonstrate some of the VR aspects of digital heritage research undertaken at the University of York. The conference presented the results of a three-year project to explore the history of a building at the heart of the political life of the UK for over 700 years. The AudioLab team presented to attendees the VR recordings they made in the chapel of the Westminster Choir, which utilised immersive 360 visuals via an Oculus Rift and SADIE binaural rendering. Later the team were interviewed by BBC Parliament about their research and how it affects our perception of St. Stephen's Chapel.

4.7.16: Audio for Virtual Reality at 140th Audio Engineering Society Convention, June 4th-7th, 2016, Paris

Gavin Kearney, AudioLab, Department of Electronics, University of York

Marcin Gorzel and Jamieson Brettle, Google Inc.

Pedro Corvo and Jelle Van Mourik, Playstation VR, Sony Computer Entertainment Europe Ltd.

Following the success of the workshop on Virtual Reality Spatial Audio at the Audio Engineering Society Audio for Games conference in February, the AES presented another workshop/panel discussion on the topic at the AES convention in Paris in June. Dr. Gavin Kearney again led a panel of industry experts to discuss current trends, practical workflows and spatial design tools for spatial audio in virtual reality systems. Participants at the event had the opportunity to listen to binaural demonstrations of audio for VR directly through 100 sets of headphones distributed throughout the audience. The workshop was one of many sessions that showcased cutting-edge research and practical applications at the convention. The four-day technical program brought the opportunity to network with and learn from leading audio industry luminaries. The AES 140th Convention took place at Paris’ Palais des Congrès between the 4th and 7th of June.

16.6.16: You are Surrounded! - Surround Sound Past, Present and Future, University of York

Surround sound is a way to create immersive audio experiences through placing multiple loudspeakers around an audience, in the cinema, theatre or at home. The development of surround sound as we know it has had a rich and varied history but has largely focused on increasing the number of loudspeakers around the audience for a more immersive experience. But can this strategy realistically be employed in the home? Gavin Kearney of the University of York demonstrated how surround sound experiences have evolved from 16th Century Venetian choral performances to the large scale cinematic installations we hear today. The lecture took place at the Department of Theatre, Film and Television at the University of York as part of the York Festival of Ideas.

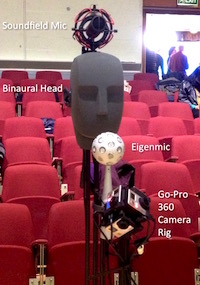

16.4.16: VR Capture of World Voice Day, York

The Department of Music and Dr Helena Daffern of York Audio Lab hosted a unique event for World Voice Day, showcasing ongoing collaborative research between the Universities of Leeds, Sheffield and York. Over 150 people turned out for 'Mastering Ensemble Singing – the Science and the Art' in the Sir Jack Lyons Concert Hall on 16 April 2016. Expert vocal coaches Robert Hollingworth (Reader in Music at York and director of the acclaimed vocal ensemble I Fagiolini) and Rob Barber (internationally acclaimed specialist in barbershop performance) coached two quartets of singers: a classical ensemble of York University students and the local Barbershop Quartet, Delekaté. Researchers on the SADIE project took the opportunity to capture the performances for Virtual Reality for use in their project research. Dr. Gavin Kearney led the AudioLab researchers in capturing the performances in First and Higher Order Ambisonics, binaurally using a Neumann KU100, as well as via the direct microphones on the performers. Visuals were captured using a Go-Pro OMNI rig. At the same time, the research team of the White Rose College of Arts and Humanities project 'Expressive Nonverbal Communication in Ensemble Performance' set up a range of audio and motion capture recording methods, measuring changes in the performers' individual and group sounds, their expressive body movements and their perceptions of their ensemble development through the session. The audience also provided valuable data on their own perceptions of the developing performances, using 'clickers'. The aim was to provide the public with insights into how researchers are developing both artistic and scientific understandings of how expert ensemble singing develops.

6.4.16: SADIE Project showcased at launch of the Digital Creativity Labs

Wednesday 6th April saw the launch of the Digital Creativity Labs (DC Labs), a major (£18 million) investment by three UK research councils, four universities, and over 80 collaborative partner organisations to create a world centre of excellence for impact-driven research, focusing on digital games, interactive media and the rich space where they converge. The main DC Labs site is York, with “spoke” sites at the Cass Business School, Goldsmiths (University of London) and Falmouth University. Participants at the launch event had a chance to learn more about the exciting activities of the Digital Creativity Labs and its partner institutions. Amongst the demonstrations and 'buzz talks' at the event was the SADIE project, demonstrating immersive motion tracked transaural sound for interactive gaming.

11.2.16: Workshop on Virtual Reality Spatial Audio, AES Audio for Games Conference 2016

Gavin Kearney, AudioLab, Department of Electronics, University of York

Marcin Gorzel and Alper Gungormusler, Google Inc.

Pedro Corvo, Playstation VR, Sony Computer Entertainment Europe Ltd.

Jelle Van Mourik, Playstation VR, Sony Computer Entertainment Europe Ltd.

Varun Nair, Two Big Ears Ltd.

Dr. Gavin Kearney led a panel of experts in this workshop, which focused on rendering truly dynamic and spatially coherent mixes for VR. The panel presented practical workflows for mixing and rendering 3-D sound for VR and explored the challenges in delivering dynamic spatial audio over a variety of VR technologies and applications. Amongst the topics discussed were the new Unity-based workflows for Google Cardboard, adapting existing game content to VR and working with cinematic VR in game engines. Dr. Kearney laid the foundation for the discussion by introducing Ambisonic workflows for binaural rendering in the context of the SADIE project. The AES Audio for Games conference took place from the 10th to the 12th of February, 2016 at the Royal Society of Chemistry in the centre of London.

1.11.15: 139th Audio Engineering Society Convention

The 139th Audio Engineering Society Convention took place from October 29th to November 1st at the Jacob Javits Center in New York. The convention brought together the world’s largest gathering of audio professionals for four days of audio technology, information, and networking as well as an academic conference. The SADIE project presented two papers at the convention: 'A HRTF Database for Virtual Loudspeaker Rendering' and 'Height Perception in Ambisonic Based Binaural Decoding', both of which were well received by patrons of the convention. Attendees also had the chance to listen to the SADIE binaural database at the poster presentations. The papers can be found in the Audio Engineering Society e-library via the publications page.

20.9.15: SADIE at ICSA, Graz

The 3rd International Conference on Spatial Audio was hosted by the Institute of Electronic Music and Acoustics (IEM) at the University of Music and Performing Arts, Graz, Austria from the 18th to the 20th of September, 2015. The conference provided a forum for Tonmeisters, engineers, and researchers to discuss challenges in spatial audio leading to novel insights and techniques. In particular, the conference brought together experts with practical and theoretical knowledge, which is crucial to ensure continuous learning, innovation and improvement in the field of spatial audio. The SADIE project presented two papers at the conference: 'A Virtual Loudspeaker Database for Ambisonics Research' and 'On Prediction of Auditory Height in Ambisonics'. The papers can be downloaded from the VDT website via the SADIE publications page.

20.5.15: BBC, Sound: Now & Next

On 19th and 20th May, 2015 the BBC's Broadcasting House was home to a two-day event on the future of broadcast audio technology. Sound: Now & Next featured talks from audio producers, artists and engineers as well as a technology fair demonstrating the latest developments in audio technology. The University of York's AudioLab were present as part of the BBC Audio Research Partnership. The team demonstrated recent work on a virtual version of York Theatre Royal. The project allows viewers to experience 360 degree visuals and auralisations based on acoustic measurements and multicamera recordings of performances in the theatre, and includes interactive binaural rendering developed for the SADIE project.

25.03.15: ICMEM, Sheffield

The 3rd International Conference on the Multimodal Experience of Music (ICMEM) was held at The University of Sheffield on the 23rd-25th March. ICMEM brought together researchers from various disciplines who investigate the multimodality of musical experiences from different perspectives. Dr. Jude Brereton and Dr. Gavin Kearney from the University of York presented a demonstration of the Virtual Singing Studio, developed by Dr. Brereton, which is a loudspeaker-based room acoustics simulation for real-time musical performance. Participants at the conference were able to transport themselves to an immersive interactive simulation of the National Centre for Early Music (York) and were able to experience the same visual and acoustic cues as they would in the real venue. Users could also acoustically interact with the virtual space, demonstrating the ways in which immersive VR can be used as a rehearsal tool for musicians in preparation for performances in real (or remote) spaces. The demonstrated version of the Virtual Singing Studio utilised an Ambisonic engine developed for the SADIE project.