Confident Safety Integration for Cobots (CSI:Cobot)

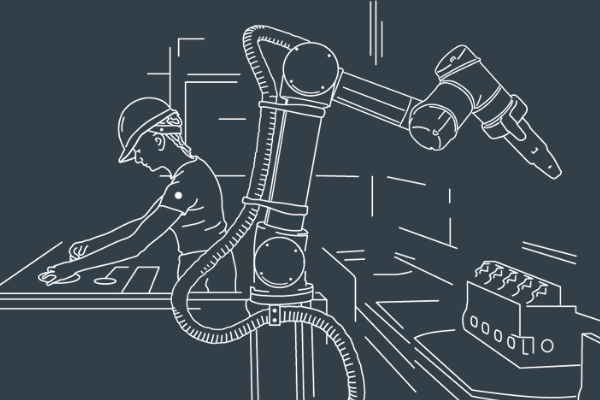

Removing the cage and curtains: how can we assure the safety of cobots to support increased productivity in manufacturing?

The CSI:Cobot project demonstrated how research in safety engineering, machine learning, and cybersecurity can be applied to human-robot collaborative processes to address safety concerns and improve confidence. The project was undertaken in collaboration with regulators, standards communities, and industrial stakeholders to ensure its relevance to future industrial applications.

Contact us

Assuring Autonomy International Programme

assuring-autonomy@york.ac.uk

+44 (0)1904 325345

Institute for Safe Autonomy, University of York, Deramore Lane, York YO10 5GH

Project report

The full project report summarises the approaches and findings of the CSI:Cobot project.

The challenge

Safety and trust issues hinder the deployment of collaborative robots (cobots) in manufacturing. This project considered how novel safety techniques can be applied to build confidence in deploying uncaged cobot systems that operate in spaces shared with humans, and how such increasingly mobile systems could be regulated.

The research

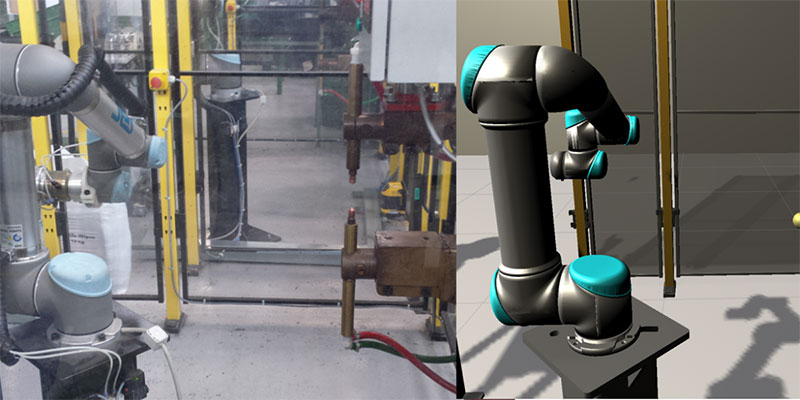

The project used two case studies, placing specific emphasis on digital twins for safety analysis, machine learning for vision-based proximity detection, synthesis of safety controllers, testing approaches for hazard analysis, and security (security policy, user authentication, and intrusion detection).

In the last phase of the project, the team worked with the Health and Safety Executive to move the project's methods towards industrial application and to help shape regulatory change concerning the use of novel approaches to robot safety.

The results

The key technical outcomes of the project are:

- development of a modular digital twinning framework for robotics, including tools for safety analysis and visualisation

- an approach to visual detection and tracking of humans and robots using Region-based Convolutional Neural Networks (R-CNNs)

- stochastic modelling and controller synthesis methods that enable dynamic switching between safe robot operating modes

- application of manual (STPA, System-Theoretic Process Analysis) and semi-automated (SASSI) techniques to analyse hazards in the system and validate safety controllers

- threat modelling and identification of security policies and requirements for collaborative systems

- development of methods for intrusion detection and continuous user authentication

- virtual and physical demonstrations of collaborative robot safety techniques

Additionally, through engagement with the HSE (the regulator for industrial robot safety), the project increased understanding of research practice within the HSE, shared information to support future regulatory decision-making, and helped shape research developments towards regulatory approval.

The project team also ran a three-day Manufacturing Robotics Challenge, including training in safety assurance and robotics, for 38 early-career researchers based in 11 countries.

How can we help regulatory organisations keep pace with technology developments?

- 1.2.1 Practical guidance for 'considering human-machine interactions'

- 1.4 Practical guidance for 'impact of security on safety' (threat modelling)

- 1.4 Practical guidance for 'impact of security on safety' (policy document)

- 2.2.4.3 Practical guidance for 'verification of deciding requirements'

- 2.6.1 Practical guidance for 'monitoring RAS operation'

- 2.7 Practical guidance for 'using simulation'

Publications

- Gleirscher, M., Calinescu, R., Douthwaite, J., Lesage, B., Paterson, C., Aitken, J., Alexander, R., and Law, J. "Verified synthesis of optimal safety controllers for human-robot collaboration" in Science of Computer Programming, April 2022.

- Eimontaite, I., Cameron, D., Rolph, J., Mokaram, S., Aitken, J.M., Gwilt, I., and Law, J. "Dynamic graphical instructions result in improved attitudes and decreased task completion time in human–robot co-working: An experimental manufacturing study" in Sustainability, 14, 3289, 2022.

- Zhang, J., Wang, S., Wang, P., Mihaylova, L., and Law, J. "A vision data repository for human-UR10 robot interactions in manufacturing", 2022.

- Wang, S., Zhang, J., Wang, P., and Mihaylova, L. "Semi-automated labelme, a deep learning based annotation tool", 2022.

- Douthwaite, J., Lesage, B., Gleirscher, M., Calinescu, R., Aitken, J.M., Alexander, R., and Law, J. "A modular digital twinning framework for safety assurance of collaborative robotics" in Frontiers in Robotics and AI, December 2021.

- Almohamade, S. S., Clark, J. A., and Law, J. "Mimicry attacks against behavioural-based user authentication for human-robot interaction" at 4th International Workshop on Emerging Technologies for Authorization and Authentication (ETAA), October 2021.

- Lesage, B.M.J.-R., and Alexander, R. "SASSI: Safety analysis using simulation-based situation coverage for cobot systems" in Proceedings of SafeComp 2021.

- Lesage, B., and Alexander, R. "Situation space description, prototype testing simulation, and evaluation" (PDF, 598kb)

- Gleirscher, M. "Yap: Tool Support for Deriving Safety Controllers from Hazard Analysis and Risk Assessments" in Luckcuck, M., and Farrell, M. (Eds.), Formal Methods for Autonomous Systems (FMAS), 2nd Workshop, Electronic Proceedings in Theoretical Computer Science, 329, 31-47. Open Publishing Association, 2020.

- Gleirscher, M., Johnson, N., Karachristou, P., Calinescu, R., Law, J., and Clark, J. "Challenges in the Safety-Security Co-Assurance of Collaborative Industrial Robots". To appear in Industrial Human-Robot Collaboration, edited by S. Fletcher and I. Ferreira.

- Gleirscher, M., and Calinescu, R. "Safety controller synthesis for collaborative robots" in Engineering of Complex Computer Systems, 25th International Conference, 28-31 October 2020, Singapore.

- Foster, S., Gleirscher, M., and Calinescu, R. "Towards deductive verification of control algorithms for autonomous marine vehicles" in Engineering of Complex Computer Systems, 25th International Conference, Singapore, 2020.

Project partners

For the second phase of the project, the team also worked with:
