1.2.1 Considering human/machine interactions

Practical guidance - automotive

Authors: Erfan Pakdamanian, Shili Sheng, Sonia Baee, Seongkook Heo, Sarit Kraus, Lu Feng (Safe-SCAD demonstrator project)

In Level 3 of autonomy (i.e. conditionally automated driving), as defined by the Society of Automotive Engineers (SAE International [1]), the driver does not need to continuously monitor the driving environment. Nevertheless, due to current technology limitations and legal restrictions, automated vehicles (AVs) may still occasionally need to hand control back to drivers (e.g. under challenging driving conditions beyond the automated system's capabilities) [2].

In such cases, AVs would initiate takeover requests (TORs) and alert drivers via auditory, visual, or vibrotactile modalities [3, 4, 5] so that drivers can resume manual driving in a timely manner. However, ensuring that drivers take over control safely is challenging. Drivers may need longer to shift their attention back to driving in some situations, such as when they have been engaged in non-driving related tasks (NDRTs) for a prolonged time [6] or when they are stressed or tired [7].

Even if a TOR is initiated with enough time for the driver to react, this does not guarantee that the driver will take over safely [8]. Moreover, frequent alarms could startle drivers and increase their stress levels, degrading the user experience in AVs [9, 10, 11]. These challenges highlight the need for AVs to continuously monitor and predict driver behaviour and to adapt accordingly to ensure a safe takeover.

The vast majority of prior work on driver takeover behaviour has focused on the empirical analysis of high-level relationships between the factors influencing takeover time and quality (e.g. [12, 13, 14]). More recently, predicting driver takeover behaviour with machine learning has been drawing increasing attention. However, only a few studies have addressed the prediction of either takeover time [15, 16] or takeover quality [17, 18], and the accuracies they report (ranging from 61% to 79%) are insufficient for practical, real-world applications. This is partly because takeover behaviour is influenced by a wide variety of factors (e.g. drivers' cognitive and physical states, vehicle states, and the contextual environment).

To address these challenges, we propose the DeepTake framework, which provides reliable predictions of multiple aspects of takeover behaviour:

- takeover intention - whether the driver would respond to a TOR;

- takeover time - how long it takes for the driver to resume manual driving after a TOR;

- takeover quality - the quality of driver intervention after resuming manual control.

Impacts on the future of AVs

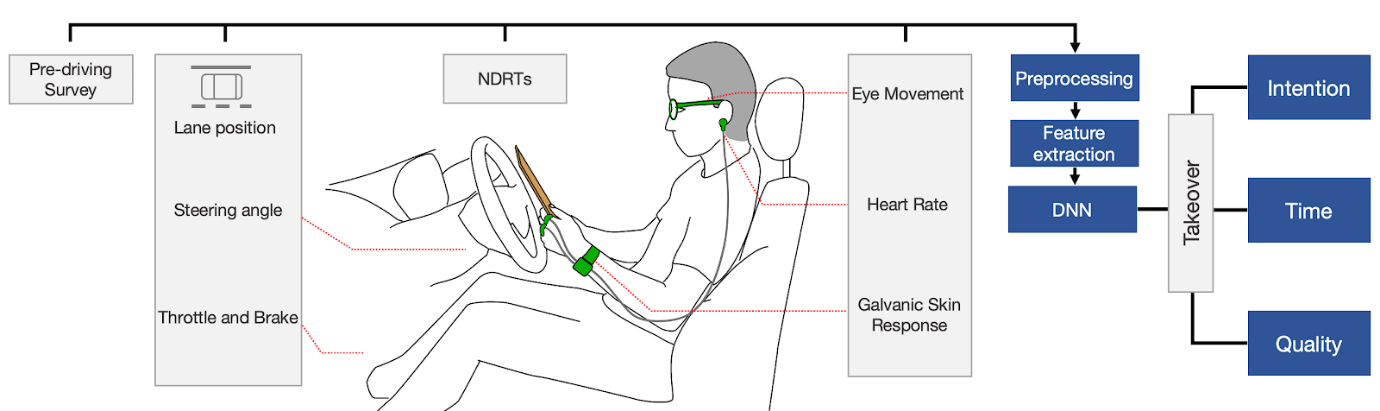

The intersection of ubiquitous computing, sensing and emerging technologies offers promising avenues for DeepTake to integrate multiple modalities into a novel human-centred framework that makes the prediction of drivers' takeover behaviour more robust. DeepTake is a unified framework for predicting driver takeover behaviour in three aspects (see Figure 1). We envision that DeepTake can be integrated into future AVs so that the automated systems can make optimal decisions based on the predicted driver takeover behaviour. For example, the automated system can warn the driver to engage less with the NDRT if either of the following holds. The first case is when the predicted takeover time exceeds how far in advance the vehicle can detect situations requiring TORs. The second is when the predicted takeover quality is too low for the driver to respond to TORs safely.
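The decision rule in the example above can be sketched as follows. This is a minimal illustration under stated assumptions, not DeepTake's implementation. Several choices here are hypothetical:

- the function name `decide_action`;
- expressing the predicted takeover time in seconds (DeepTake itself predicts a takeover-time class);
- the detection lead-time input;
- treating only the "low" quality class as unacceptable by default.

```python
from enum import Enum
from typing import Collection


class Action(Enum):
    """Hypothetical actions the automated system could take."""
    CONTINUE = "continue"          # predicted takeover looks adequate
    WARN_REDUCE_NDRT = "warn"      # ask the driver to engage less with the NDRT


def decide_action(predicted_takeover_time_s: float,
                  detection_lead_time_s: float,
                  predicted_quality: str,
                  unacceptable_qualities: Collection[str] = ("low",)) -> Action:
    """Warn the driver if the predicted takeover is too slow or too poor.

    predicted_takeover_time_s: assumed estimate (in seconds) of how long the
        driver would need to resume manual control.
    detection_lead_time_s: assumed time between the vehicle detecting a
        situation that requires a TOR and the situation being reached.
    predicted_quality: predicted takeover-quality class, e.g. "low",
        "medium" or "high".
    """
    if predicted_takeover_time_s > detection_lead_time_s:
        return Action.WARN_REDUCE_NDRT
    if predicted_quality in unacceptable_qualities:
        return Action.WARN_REDUCE_NDRT
    return Action.CONTINUE


if __name__ == "__main__":
    print(decide_action(6.0, 4.0, "high"))   # slower than the detection lead time
    print(decide_action(3.0, 8.0, "high"))   # fast enough and good quality
```

Passing a wider `unacceptable_qualities` set, such as `("low", "medium")`, would make the hypothetical rule more conservative.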

Activities

Figure 1 illustrates an overview of the DeepTake framework. First, we collect multimodal data such as driver biometrics, pre-driving surveys, types of engagement in NDRTs, and vehicle data. These multiple sensing modalities and data streams provide complementary information that helps obtain a more accurate and robust prediction of drivers' takeover behaviour. Second, the collected multimodal data are pre-processed, followed by segmentation and feature extraction. The extracted features are then labelled with the takeover behaviour class to which they belong. In our framework, we define each aspect of takeover behaviour as a classification problem (i.e. takeover intention as a binary classification, and takeover time and quality as three-class classifications). Finally, we build deep neural network (DNN)-based predictive models for each aspect of takeover behaviour. DeepTake's takeover predictions could enable the automated system to adjust the timing of TORs to match drivers' needs and ultimately improve safety.

Figure 1 - DeepTake uses data from multiple sources (pre-driving survey, vehicle data, NDRT information, and driver biometrics) and feeds the features extracted from the pre-processed data into deep neural network models for the prediction of takeover intention, time and quality.

Below, we discuss each of the steps used in developing the DeepTake framework.

1. Data collection: we collect multimodal data such as driver biometrics, pre-driving surveys, types of engagement in NDRTs, and vehicle data. Collecting multimodal data addresses a main drawback of relying on a single data source, which cannot capture the complex underlying state of the driver. Because driving is a dynamic task that can be affected by internal and external factors, multiple physiological data streams were used. However, DeepTake can be adjusted to fit whichever data inputs are available.

2. Pre-processing: the collected multimodal data are pre-processed, followed by segmentation and feature extraction. Because physiological wearables are sensitive to movement, intensive pre-processing should be applied to remove motion artefacts and extract meaningful information. The extracted features are then labelled with the takeover behaviour class to which they belong.

3. Labelling: DeepTake aims to cover multiple aspects of takeover behaviour to provide more reliable outcomes. We define each aspect of takeover behaviour as a classification problem (i.e. takeover intention as a binary classification, and takeover time and quality as three-class classifications).

   Takeover time was labelled as the period from the moment the takeover request alarm is triggered to the moment a participant begins regaining control by pressing the two buttons embedded in the steering wheel. Each participant's takeover time was then categorised as low, medium, or high.

   For takeover quality, we consider a motivating scenario. The driver needs to take over control of the vehicle and swerve away from an obstacle blocking the same lane, without deviating so far from the current lane that the vehicle risks crashing into nearby traffic. Takeover quality was therefore labelled by the lateral deviation 𝑃 from the current lane, and the feature vectors were assigned to three classes: “low” (staying in the lane, 𝑃 < 3.5 m), “high” (manoeuvring around the obstacle safely with about one lane of deviation, 3.5 m ≤ 𝑃 ≤ 7 m), and “medium” (manoeuvring around the obstacle but deviating too much, 7 m < 𝑃 ≤ 10 m).

4. Modelling: DeepTake uses a feed-forward deep neural network (DNN) trained with mini-batch stochastic gradient descent. The DNN architecture begins with an input layer matching the input features. Each subsequent layer receives the outputs of the previous layer and passes its own outputs to the next. Although we used three classes for takeover time and quality, the output layer of the DNN can be customised for a different number of classes. Indeed, we also demonstrated DeepTake's capability to predict takeover time with five classes. We evaluate the DNN model's performance against multiple state-of-the-art models.

5. Evaluation metrics: several methods can be used to assess the reliability of a model, and each reflects a different aspect of its performance. We therefore evaluate the DeepTake framework using multiple metrics. We first apply 10-fold cross-validation on the training data to evaluate how well the selected features predict driver takeover intention, time and quality. We then compare the proposed model against six other models using Receiver Operating Characteristic (ROC) curves and weighted F1 scores. Finally, we use confusion matrices to summarise DeepTake's per-class performance in distinguishing takeover intention, time, and quality.
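Step 1 mentions combining several data sources and adjusting DeepTake to the available inputs. One simple way to do this is early fusion, which concatenates per-modality feature vectors in a fixed, configurable order. This is an illustrative assumption, not taken from DeepTake: the modality names, the `fuse_modalities` helper and plain concatenation are all hypothetical.

```python
from typing import List, Mapping, Sequence

# Hypothetical modality names, loosely following the data sources in Figure 1.
DEFAULT_MODALITIES = ("biometrics", "survey", "ndrt", "vehicle")


def fuse_modalities(sample: Mapping[str, Sequence[float]],
                    modalities: Sequence[str] = DEFAULT_MODALITIES) -> List[float]:
    """Concatenate per-modality feature vectors into one model input.

    `modalities` selects which data streams the model is configured to use,
    so a deployment with fewer sensors passes a shorter list (and a model is
    trained on that reduced input). A configured modality that is missing
    from `sample` raises KeyError instead of being silently skipped, which
    would shift the position of every later feature.
    """
    fused: List[float] = []
    for name in modalities:
        if name not in sample:
            raise KeyError(f"missing configured modality: {name!r}")
        fused.extend(float(x) for x in sample[name])
    return fused


if __name__ == "__main__":
    sample = {
        "biometrics": [72.0, 0.4],   # e.g. heart rate, skin conductance
        "survey": [3],               # e.g. a pre-driving questionnaire score
        "ndrt": [1, 0, 0],           # one-hot NDRT type
        "vehicle": [27.5],           # e.g. speed in m/s
    }
    print(fuse_modalities(sample))
    print(fuse_modalities(sample, modalities=("biometrics", "vehicle")))
```

Concatenation keeps the sketch simple. Richer fusion schemes would be equally compatible with the step as described.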
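Step 2 (pre-processing, segmentation and feature extraction) might look roughly like the following for a single physiological signal. The median/MAD spike filter is only a crude stand-in for proper motion-artefact removal. The window length, step and statistical features are hypothetical choices rather than DeepTake's actual pipeline.

```python
import statistics
from typing import List, Sequence


def remove_spikes(signal: Sequence[float], k: float = 5.0) -> List[float]:
    """Replace outlying samples with the signal median.

    A sample is treated as an artefact if it deviates from the median by more
    than k times the median absolute deviation (MAD). Real wearable data
    usually needs more specialised filtering than this.
    """
    med = statistics.median(signal)
    mad = statistics.median([abs(x - med) for x in signal])
    if mad == 0:
        return list(signal)
    return [med if abs(x - med) > k * mad else x for x in signal]


def segment(signal: Sequence[float], window: int, step: int) -> List[List[float]]:
    """Split a signal into fixed-length windows that start every `step` samples."""
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [list(signal[i:i + window])
            for i in range(0, len(signal) - window + 1, step)]


def window_features(win: Sequence[float]) -> List[float]:
    """Simple per-window features: mean, population std, min and max."""
    return [statistics.mean(win), statistics.pstdev(win), min(win), max(win)]


if __name__ == "__main__":
    raw = [1.0, 1.2, 0.9, 50.0, 1.1, 1.0, 0.8, 1.2]   # 50.0 mimics a motion spike
    clean = remove_spikes(raw)
    features = [window_features(w) for w in segment(clean, window=4, step=2)]
    print(len(features), features[0])
```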
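The labelling rules in step 3 can be written down directly. The takeover-quality thresholds below are the ones stated in that step. The takeover-time cut-offs are not given in this section, so splitting the observed times into tertiles with `statistics.quantiles` is an assumption, as are all function names. Lateral deviation is assumed to be a non-negative distance in metres. Deviations above 10 m, which the stated classes do not cover, are left unlabelled.

```python
import statistics
from typing import Optional, Sequence, Tuple


def takeover_time(alarm_time_s: float, button_press_time_s: float) -> float:
    """Time from the TOR alarm to the steering-wheel button press."""
    if button_press_time_s < alarm_time_s:
        raise ValueError("button press precedes the alarm")
    return button_press_time_s - alarm_time_s


def tertile_cutoffs(times: Sequence[float]) -> Tuple[float, float]:
    """Hypothetical cut-offs that split observed takeover times into thirds."""
    lower, upper = statistics.quantiles(times, n=3)
    return lower, upper


def label_time(t: float, lower: float, upper: float) -> str:
    """Three-class takeover-time label (low = fastest)."""
    if t < lower:
        return "low"
    if t < upper:
        return "medium"
    return "high"


def label_quality(p_m: float) -> Optional[str]:
    """Three-class takeover-quality label from lateral deviation P in metres."""
    if p_m < 3.5:
        return "low"      # stayed in the lane and did not clear the obstacle
    if p_m <= 7.0:
        return "high"     # cleared the obstacle with about one lane of deviation
    if p_m <= 10.0:
        return "medium"   # cleared the obstacle but deviated too much
    return None           # outside the stated classes


if __name__ == "__main__":
    times = [takeover_time(0.0, t) for t in (1.8, 2.4, 2.9, 3.3, 4.1, 5.6)]
    lower, upper = tertile_cutoffs(times)
    print([label_time(t, lower, upper) for t in times])
    print([label_quality(p) for p in (1.2, 4.0, 8.5)])
```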
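Step 4's model can be illustrated with a small feed-forward network trained by mini-batch stochastic gradient descent on a softmax cross-entropy loss. The sketch is dependency-free for portability and deliberately small. It has a single ReLU hidden layer, whereas a deep network would stack several. DeepTake's actual layer sizes, activations and hyperparameters are not given in this section, and the class name `FeedForwardNet` and all defaults are hypothetical. Because the output layer has `num_classes` units, the same code covers several cases: binary intention, three-class time or quality, and the five-class takeover-time variant.

```python
import math
import random
from typing import Sequence


class FeedForwardNet:
    """One-hidden-layer ReLU network with a softmax output of num_classes units."""

    def __init__(self, n_in: int, n_hidden: int, num_classes: int, seed: int = 0):
        rng = random.Random(seed)
        s1, s2 = math.sqrt(2.0 / n_in), math.sqrt(1.0 / n_hidden)
        self.w1 = [[rng.gauss(0.0, s1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.gauss(0.0, s2) for _ in range(n_hidden)] for _ in range(num_classes)]
        self.b2 = [0.0] * num_classes

    def forward(self, x: Sequence[float]):
        """Return hidden activations and class probabilities for one input."""
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        z = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        m = max(z)                                # subtract max for numerical stability
        e = [math.exp(v - m) for v in z]
        total = sum(e)
        return h, [v / total for v in e]

    def predict(self, x: Sequence[float]) -> int:
        _, p = self.forward(x)
        return max(range(len(p)), key=p.__getitem__)

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int], epochs: int = 300,
            batch_size: int = 8, lr: float = 0.1, seed: int = 0) -> "FeedForwardNet":
        """Mini-batch SGD on the mean cross-entropy loss of each batch."""
        rng = random.Random(seed)
        order = list(range(len(X)))
        for _ in range(epochs):
            rng.shuffle(order)
            for start in range(0, len(order), batch_size):
                batch = order[start:start + batch_size]
                gw1 = [[0.0] * len(r) for r in self.w1]
                gb1 = [0.0] * len(self.b1)
                gw2 = [[0.0] * len(r) for r in self.w2]
                gb2 = [0.0] * len(self.b2)
                for i in batch:
                    h, p = self.forward(X[i])
                    # Gradient of softmax + cross-entropy w.r.t. the output logits.
                    dz = [pk - (1.0 if k == y[i] else 0.0) for k, pk in enumerate(p)]
                    for k, dzk in enumerate(dz):
                        gb2[k] += dzk
                        for j, hj in enumerate(h):
                            gw2[k][j] += dzk * hj
                    for j, hj in enumerate(h):
                        if hj <= 0.0:                 # ReLU passes no gradient here
                            continue
                        dh = sum(dz[k] * self.w2[k][j] for k in range(len(dz)))
                        gb1[j] += dh
                        for d, xd in enumerate(X[i]):
                            gw1[j][d] += dh * xd
                # Apply the averaged batch gradient only after it is fully accumulated.
                step = lr / len(batch)
                for k, row in enumerate(self.w2):
                    self.b2[k] -= step * gb2[k]
                    for j in range(len(row)):
                        row[j] -= step * gw2[k][j]
                for j, row in enumerate(self.w1):
                    self.b1[j] -= step * gb1[j]
                    for d in range(len(row)):
                        row[d] -= step * gw1[j][d]
        return self


if __name__ == "__main__":
    rng = random.Random(1)
    X, y = [], []
    for label in range(3):                       # toy, well-separated 3-class data
        for _ in range(10):
            x = [rng.gauss(0.0, 0.1) for _ in range(3)]
            x[label] += 1.0
            X.append(x)
            y.append(label)
    net = FeedForwardNet(n_in=3, n_hidden=16, num_classes=3).fit(X, y)
    accuracy = sum(net.predict(x) == t for x, t in zip(X, y)) / len(X)
    print(f"training accuracy: {accuracy:.2f}")
```

In practice a framework such as PyTorch or TensorFlow would be the natural choice. The explicit loops here only make the mini-batch gradient step visible.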
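The evaluation in step 5 relies on standard tools. Below are plain-Python versions of three of them:

- k-fold index splitting;
- a confusion matrix;
- the support-weighted F1 score, where each class's F1 is weighted by its number of true instances (the definition scikit-learn uses for `average="weighted"`).

ROC analysis is omitted for brevity, and the helper names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle indices 0..n-1 and return (train, test) index lists for each fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    return [([j for f, fold in enumerate(folds) if f != i for j in fold], folds[i])
            for i in range(k)]


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """m[t][p] counts samples of true class t predicted as class p."""
    m = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1
    return m


def weighted_f1(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> float:
    """Per-class F1 averaged with weights proportional to each class's support."""
    m = confusion_matrix(y_true, y_pred, num_classes)
    total = len(y_true)
    score = 0.0
    for c in range(num_classes):
        tp = m[c][c]
        fp = sum(m[r][c] for r in range(num_classes)) - tp
        fn = sum(m[c]) - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        score += f1 * (tp + fn) / total      # tp + fn is the class support
    return score


if __name__ == "__main__":
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 1, 1, 1, 2, 0]
    print(confusion_matrix(y_true, y_pred, 3))
    print(round(weighted_f1(y_true, y_pred, 3), 3))
    print([len(test) for _, test in k_fold_indices(25, k=10)])
```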

Methodology

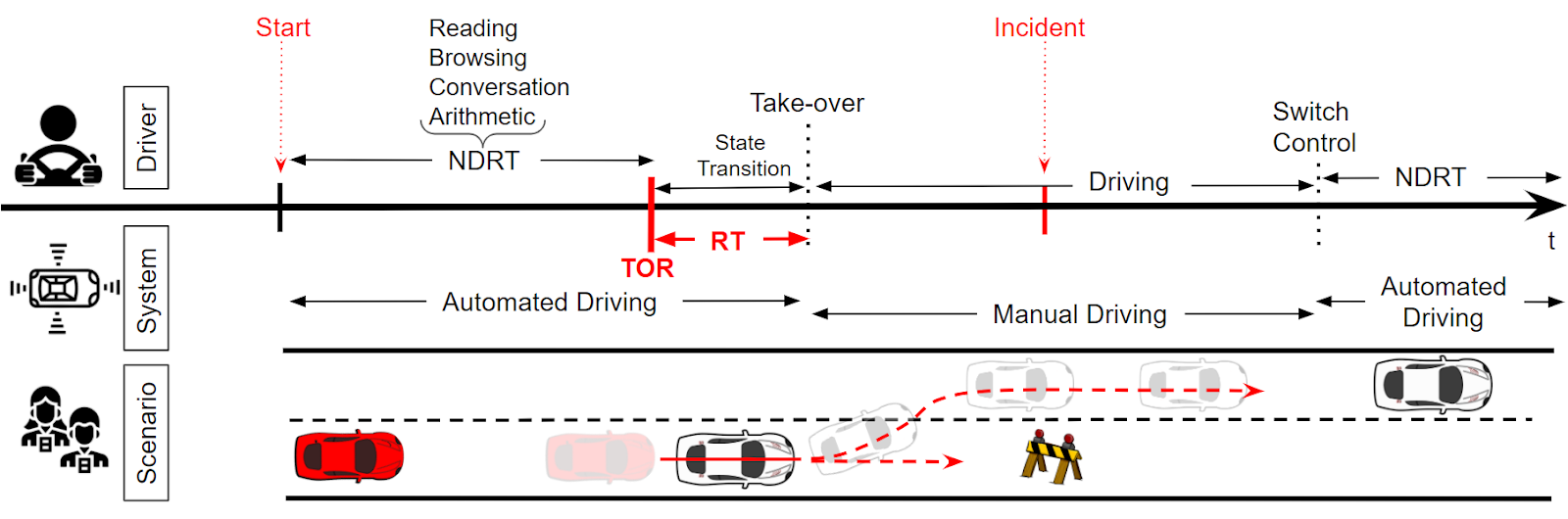

Driving scenarios: An experiment was conducted to identify the link between the captured data and takeover behaviour. The driving scenarios comprised a four-lane rural highway, with various trees and houses placed alongside the roadway. We designed five representative situations in which the AV may need to issue a TOR to the driver, including novel and unfamiliar incidents that appear in the same lane. Figure 2 displays an example of a takeover situation used in our study. Because the takeovers were unplanned, participants could react more naturally, as they normally would in AVs, and their reaction times fell into distinguishable categories. In other words, participants had no prior knowledge of when an incident would appear, and it could occur among other incidents requiring situational awareness and decision-making.

Figure 2 - An example of a takeover situation used in the study

Means to address takeover readiness: We believe that our human-centred DeepTake framework is a step towards enabling longer engagement with NDRTs during automated driving. DeepTake provides a new approach that helps monitoring systems continuously observe and predict the driver's mental and physical state, so that the automated system can make optimal decisions and improve safety and user experience in AVs. Specifically, by integrating DeepTake into the monitoring systems of AVs, the automated system can infer from multiple sensor streams when the driver intends to take over. Once the system detects a high likelihood of takeover intention, it can adapt its driving behaviour to match the driver's needs for an acceptable and safe takeover time and quality. In this way, a driver receiving a TOR can be ascertained to be capable of taking over properly; otherwise, the system can allow continued engagement in the NDRT or issue a warning about it. Integrating DeepTake into the future design of AVs thus makes human-system interaction more natural, efficient and safe. Since DeepTake is intended for safety-critical applications, we further validated it to ensure that it meets important safety requirements.
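Acting only once the system detects a high likelihood of takeover intention could be implemented in several ways. One option, assumed here purely for illustration, is to require the predicted intention probability to stay above a threshold for several consecutive windows before treating the intention as confirmed. The function name, threshold and window count are hypothetical.

```python
from typing import Iterable, Iterator


def confirm_intention(probabilities: Iterable[float],
                      threshold: float = 0.8,
                      consecutive: int = 3) -> Iterator[bool]:
    """Yield, per prediction window, whether takeover intention is confirmed.

    Intention counts as confirmed only after `consecutive` successive
    probabilities reach `threshold`; any lower value resets the count, which
    filters out isolated spikes in the classifier output.
    """
    if consecutive < 1:
        raise ValueError("consecutive must be at least 1")
    run = 0
    for p in probabilities:
        run = run + 1 if p >= threshold else 0
        yield run >= consecutive


if __name__ == "__main__":
    stream = [0.9, 0.85, 0.5, 0.9, 0.95, 0.99, 0.7]
    print(list(confirm_intention(stream)))
```

A longer run requirement reduces false alarms at the cost of a later response, so the trade-off would need to be tuned against the available detection lead time.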

The DeepTake framework provides a promising new direction for modelling driver takeover behaviour. It reduces reliance on generic, fixed TOR designs that generally assume the same takeover time for all drivers. This supports designs with dynamic feedback aimed at higher user acceptance of AVs. The information obtained by DeepTake can also be conveyed to passengers and to other vehicles, giving them greater situational awareness when making movement decisions.

References

- [1] SAE On-Road Automated Vehicle Standards Committee et al. 2018. Taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles. SAE International: Warrendale, PA, USA (2018).

- [2] Rod McCall, Fintan McGee, Alexander Mirnig, Alexander Meschtscherjakov, Nicolas Louveton, Thomas Engel, and Manfred Tscheligi. 2019. A taxonomy of autonomous vehicle handover situations. Transportation research part A: policy and practice 124 (2019), 507–522.

- [3] Frederik Naujoks, Christoph Mai, and Alexandra Neukum. 2014. The effect of urgency of take-over requests during highly automated driving under distraction conditions. Advances in Human Aspects of Transportation 7, Part I (2014), 431.

- [4] Erfan Pakdamanian, Lu Feng, and Inki Kim. 2018. The effect of whole-body haptic feedback on driver’s perception in negotiating a curve. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Vol. 62. SAGE Publications Sage CA: Los Angeles, CA, 19–23.

- [5] Jingyan Wan and Changxu Wu. 2018. The effects of vibration pattern of take-over request and non-driving tasks on taking-over control of automated vehicles. International Journal of Human–Computer Interaction 34, 11 (2018), 987–998.

- [6] Kathrin Zeeb, Manuela Härtel, Axel Buchner, and Michael Schrauf. 2017. Why is steering not the same as braking? The impact of non-driving related tasks on lateral and longitudinal driver interventions during conditionally automated driving. Transportation research part F: traffic psychology and behaviour 50 (2017), 65–79.

- [7] Anna Feldhütter, Dominik Kroll, and Klaus Bengler. 2018. Wake up and take over! The effect of fatigue on the take-over performance in conditionally automated driving. In 2018 21st International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2080–2085.

- [8] Anthony D. McDonald, Hananeh Alambeigi, Johan Engström, Gustav Markkula, Tobias Vogelpohl, Jarrett Dunne, and Norbert Yuma. 2019. Toward computational simulations of behavior during automated driving takeovers: a review of the empirical and modeling literatures. Human factors 61, 4 (2019), 642–688.

- [9] Moritz Körber, Lorenz Prasch, and Klaus Bengler. 2018. Why do I have to drive now? Post hoc explanations of takeover requests. Human factors 60, 3 (2018), 305–323.

- [10] Erfan Pakdamanian, Nauder Namaky, Shili Sheng, Inki Kim, James Arthur Coan, and Lu Feng. 2020. Toward Minimum Startle After Take-Over Request: A Preliminary Study of Physiological Data. In 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications. 27–29.

- [11] Jiwon Lee and Ji Hyun Yang. 2020. Analysis of driver’s EEG given take-over alarm in SAE level 3 automated driving in a simulated environment. International journal of automotive technology 21, 3 (2020), 719–728.

- [12] Na Du, Feng Zhou, Elizabeth M Pulver, Dawn M Tilbury, Lionel P Robert, Anuj K Pradhan, and X Jessie Yang. 2020. Examining the effects of emotional valence and arousal on takeover performance in conditionally automated driving. Transportation research part C: emerging technologies 112 (2020), 78–87.

- [13] Brian Mok, Mishel Johns, David Miller, and Wendy Ju. 2017. Tunneled in: Drivers with active secondary tasks need more time to transition from automation. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. 2840–2844.

- [14] Bo Zhang, Joost de Winter, Silvia Varotto, Riender Happee, and Marieke Martens. 2019. Determinants of take-over time from automated driving: A meta-analysis of 129 studies. Transportation research part F: traffic psychology and behaviour 64 (2019), 285–307.

- [15] Frauke L Berghöfer, Christian Purucker, Frederik Naujoks, Katharina Wiedemann, and Claus Marberger. 2018. Prediction of take-over time demand in conditionally automated driving-results of a real world driving study. In Proceedings of the Human Factors and Ergonomics Society Europe Chapter 2018 Annual Conference. 69–81.

- [16] Alexander Lotz and Sarah Weissenberger. 2018. Predicting take-over times of truck drivers in conditional autonomous driving. In International Conference on Applied Human Factors and Ergonomics. Springer, 329–338.

- [17] Christian Braunagel, Wolfgang Rosenstiel, and Enkelejda Kasneci. 2017. Ready for take-over? A new driver assistance system for an automated classification of driver take-over readiness. IEEE Intelligent Transportation Systems Magazine 9, 4 (2017), 10–22.

- [18] Nachiket Deo and Mohan M Trivedi. 2019. Looking at the driver/rider in autonomous vehicles to predict take-over readiness. IEEE Transactions on Intelligent Vehicles (2019).

Contact us

Assuring Autonomy International Programme

assuring-autonomy@york.ac.uk

+44 (0)1904 325345

Institute for Safe Autonomy, University of York, Deramore Lane, York YO10 5GH

Related links

Download this guidance as a PDF:
